HTTP (Hypertext Transfer Protocol) is the application-layer transfer protocol that the World Wide Web is based on. Originally conceived in the late 80s as a single-line text-based protocol, and documented initially as HTTP/0.9, its first full-featured iteration (v. 1.0) was documented in RFC 1945 in 1996.

As the use and expectations of the internet grew, so did the need to improve HTTP itself. Version 1.1 was documented in RFC 2068 in 1997 and RFC 2616 in 1999, with subsequent RFCs (7230-7235) in 2014 — a whole decade and a half later! — documenting message syntax/routing; semantics/content; conditional and range requests; caching; and authentication.

The current version of HTTP is HTTP/2. It was based on Google’s SPDY project, and it was the first major overhaul of the protocol, standardized in RFC 7540 in 2015, with RFC 7541 introducing Header Compression (HPACK) the same year.

A mere four years after the introduction of HTTP/2, a new standard based on Google’s experimental QUIC protocol began to emerge: HTTP/3. Its purpose: to increase the speed and security of user interactions with websites and APIs.

In October 2020, before entering the RFC stage, the documents describing HTTP/3 (and QUIC) entered the IETF Last Call phase of the Internet-Draft stage. Once HTTP/3 is finally nailed down as a standard, however, is there still a place for HTTP/2? This article describes and compares the two versions of the protocol, and gives some suggestions of where each finds suitable applications.

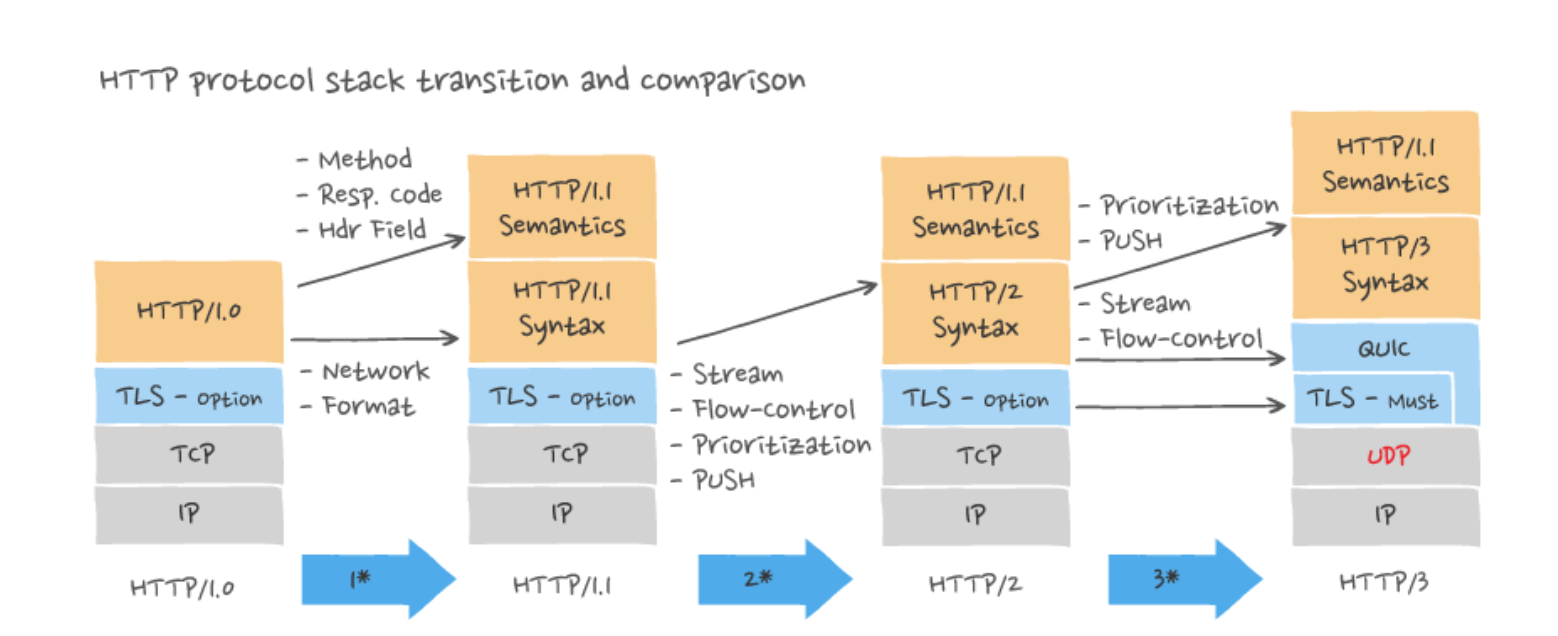

Comparison of HTTP protocol stack changes from HTTP/1.1 to HTTP/3

The background to HTTP/2

HTTP/2 was created to get around a number of problems arising from the unstructured evolution of HTTP/1.1, most of them performance-related.

Many of the issues arose due to the inherent limitations of HTTP/1.1, boiling down to response times increasing with traffic:

HTTP head-of-line blocking (HTTP HoL): response times increase when a sequence of requests is held up by a slow or large response ahead of them on the same connection.

Protocol overheads: server and client exchange additional request and response metadata, with repeated transmissions of headers and cookies building up and slowing down responses.

TCP slow start: as a congestion control measure, the protocol repeatedly probes the network to figure out the available capacity; before it reaches full capacity, the multiple small transfers can cause lag.
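To get a feel for the cost of TCP slow start, here is a minimal sketch of an idealized model. It assumes no packet loss, no receiver-window limit, a 1460-byte segment size, an initial congestion window of 10 segments, and a window that doubles every round trip. The function name and defaults are illustrative assumptions, not part of any standard API.

```python
def round_trips_to_send(total_bytes: int, mss: int = 1460, initial_cwnd: int = 10) -> int:
    """Count round trips needed to deliver total_bytes under idealized slow start.

    Assumptions: no loss, no receiver-window limit, and a congestion
    window (in segments) that doubles after every round trip.
    """
    segments_left = -(-total_bytes // mss)  # ceiling division
    cwnd = initial_cwnd
    trips = 0
    while segments_left > 0:
        segments_left -= cwnd  # send one full window per round trip
        cwnd *= 2              # slow start: the window doubles each round trip
        trips += 1
    return trips


small = round_trips_to_send(14_600)      # fits in the initial window
medium = round_trips_to_send(100_000)    # needs several windows
large = round_trips_to_send(1_000_000)
```

Under these assumptions, a 14,600-byte response fits in one round trip, a 100 KB response takes three, and a 1 MB response takes seven. On a new connection, every one of those round trips is paid in full network latency, which is why many short-lived connections feel slow.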

Developers tried to address these problems and limitations with workarounds such as domain sharding, pipelining, and using “cookieless” domains, but these often led to compatibility and interoperability issues. Clearly, the aging HTTP/1.1 standard needed updating.

In 2009, Google announced SPDY, an experimental protocol, as a structured approach to solving the problems with HTTP/1.x. The HTTP Working Group noted the success Google had in meeting increased performance goals with SPDY. In November 2012, a call for proposals was made for HTTP/2, with the SPDY specification adopted as the starting point.

Over the next few years, HTTP/2 and SPDY co-evolved with SPDY as the experimental branch. HTTP/2 was published as an international standard in May 2015 as RFC 7540.

The need for HTTP/3

Over the years, HTTP has continued evolving, from half a decade of version 0.9 and a year of version 1.0, through roughly 15 years of 1.1, eventually arriving at version 2 in 2015. At every iteration, new features have been added to the protocol to address a multitude of needs, such as application layer requirements, security considerations, session handling, and media types. For an in-depth look at HTTP/2 and its evolution from HTTP/1.0, read the HTTP humble origins section in our HTTP evolution – HTTP/2 Deep Dive.

During this evolution, the underlying transport mechanism of HTTP has by and large remained the same. However, as internet traffic exploded with massive public uptake of mobile device technology, the freshly unboxed HTTP/2 has struggled to provide a smooth, transparent web browsing experience. It strains under the volume and speed of modern internet traffic, especially under the ever-increasing demands of realtime applications and their users. This has resulted in a number of caveats when using this version of the protocol, exposing obvious opportunities for improvement.

Multiplexing, load spikes, and request prioritization

Once upon a time, the realtime whiteboarding/charting company Lucidchart ran into an unexpected problem after turning on HTTP/2 on their load balancers (LBs).

Long story short: they noticed a higher CPU load and slower response times on the servers behind those very LBs. HTTP/2 touted increased bandwidth efficiency, decreased latency, and request prioritization so something was not adding up. But what? Traffic appeared normal at first glance, and there were no code changes to blame for the strange behavior. But while the average number of requests was normal, the flow itself contained high-spike bursts of many simultaneous requests. Previous provisioning models failed to account for this kind of circumstance, and the result was that responses to requests timed out or were delayed.

The actual reason turned out to be that as long as users’ browsers were using HTTP/1.1, this effectively throttled the number of concurrent requests due to HTTP/1.1’s serial request processing nature, keeping traffic ordered and within predictable bounds. Turning on HTTP/2 allowed the possibility of unpredictable spikes because it features multiplexing, i.e. using a single connection to send concurrent requests. Batching requests is great for the client, but the simultaneous request start times and volume caused Lucidchart’s servers a major headache.
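The difference in traffic shape can be illustrated with a small back-of-the-envelope model. This sketch assumes a page that needs 60 resources, a browser that opens about 6 HTTP/1.1 connections per host with one request in flight on each, and an HTTP/2 connection that allows 100 concurrent streams. All three numbers are illustrative assumptions, not measurements from Lucidchart.

```python
from typing import List


def request_waves(num_requests: int, max_in_flight: int) -> List[int]:
    """Split a page's requests into the waves a server sees when at most
    max_in_flight requests can be outstanding at once."""
    waves = []
    remaining = num_requests
    while remaining > 0:
        wave = min(remaining, max_in_flight)
        waves.append(wave)
        remaining -= wave
    return waves


# HTTP/1.1: roughly 6 connections per host, one request at a time on each.
http1_waves = request_waves(60, max_in_flight=6)
# HTTP/2: one connection, many concurrent streams.
http2_waves = request_waves(60, max_in_flight=100)
```

In this model the HTTP/1.1 client produces ten waves of 6 requests, while the HTTP/2 client sends all 60 at once. The total work is identical, but the peak the server has to absorb is ten times higher.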

In the end, being able to use HTTP/2 on Lucidchart’s servers required implementing non-trivial solutions like throttling the balancer and re-architecting the application. A lack of maturity of software for HTTP/2 and of server support for HTTP prioritization were additional gotchas. And some applications support HTTP/2 only over secure sockets (HTTPS), which is an unnecessary and onerous architectural complication for secure internal networks.

Server push gets complicated

The HTTP/2 push feature can do more harm than good if not used with care. For example, a returning visitor may have a cached copy of files, in which case the server should not be pushing resources. Making the push cache-aware can solve this problem, but this comes with its own caveats and can quickly become complicated.
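A cache-aware push decision could look roughly like the sketch below. It assumes the server has some record of what a given client already holds, for example a cookie listing previously pushed assets. The function name and the asset paths are hypothetical.

```python
from typing import Iterable, List


def resources_to_push(page_assets: Iterable[str], already_cached: Iterable[str]) -> List[str]:
    """Return only the assets the client is not known to have, in page order."""
    cached = set(already_cached)
    return [asset for asset in page_assets if asset not in cached]


# A returning visitor whose record says the stylesheet was pushed earlier.
to_push = resources_to_push(
    ["/app.css", "/app.js", "/logo.png"],
    already_cached={"/app.css"},
)
```

The complication is that the server-side record is only a guess. If the browser has since evicted a file from its cache, the record is stale and the server skips a push that would have helped.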

That pesky Head of Line Blocking again

HTTP/2 addressed the HTTP-level head-of-line blocking problem by multiplexing resources: they are broken up into chunks and sent concurrently over the same connection. However, TCP-level head-of-line blocking can still occur, because when a packet is lost, the packets behind it must wait until it has been retransmitted and everything can be delivered in order.
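The effect of a single lost packet can be sketched with a toy model. Each packet carries a sequence number, the stream it belongs to, and an arrival time. With connection-wide ordering (as in TCP), a packet is readable only after every earlier packet has arrived. With per-stream ordering (the approach QUIC takes, described in the next section), it only waits for earlier packets of its own stream. The packet list and timings are made-up illustration data.

```python
from typing import Dict, List, Tuple

Packet = Tuple[int, str, float]  # (sequence number, stream id, arrival time in ms)


def completion_times(packets: List[Packet], per_stream_ordering: bool) -> Dict[str, float]:
    """Return when each stream's last packet becomes readable by the application."""
    done: Dict[str, float] = {}
    latest_seen: Dict[str, float] = {}
    for _seq, stream, arrival in sorted(packets):
        key = stream if per_stream_ordering else "connection"
        # A packet is readable only once everything ordered before it has arrived.
        latest_seen[key] = max(latest_seen.get(key, 0.0), arrival)
        done[stream] = latest_seen[key]
    return done


packets = [
    (1, "css", 10.0),
    (2, "js", 210.0),  # lost and retransmitted, so it arrives late
    (3, "css", 12.0),
    (4, "img", 13.0),
    (5, "js", 14.0),
]
tcp_like = completion_times(packets, per_stream_ordering=False)
quic_like = completion_times(packets, per_stream_ordering=True)
```

In the TCP-like case all three streams finish at 210 ms, because the one late packet holds up everything behind it. In the per-stream case only the `js` stream waits, and `css` and `img` finish at 12 ms and 13 ms.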

How is HTTP/2 different from HTTP/3?

The aim of HTTP/3 is to provide fast, reliable, and secure web connections across all forms of devices by resolving transport-related issues of HTTP/2. To do this, it uses a different transport layer network protocol called QUIC, originally developed by Google.

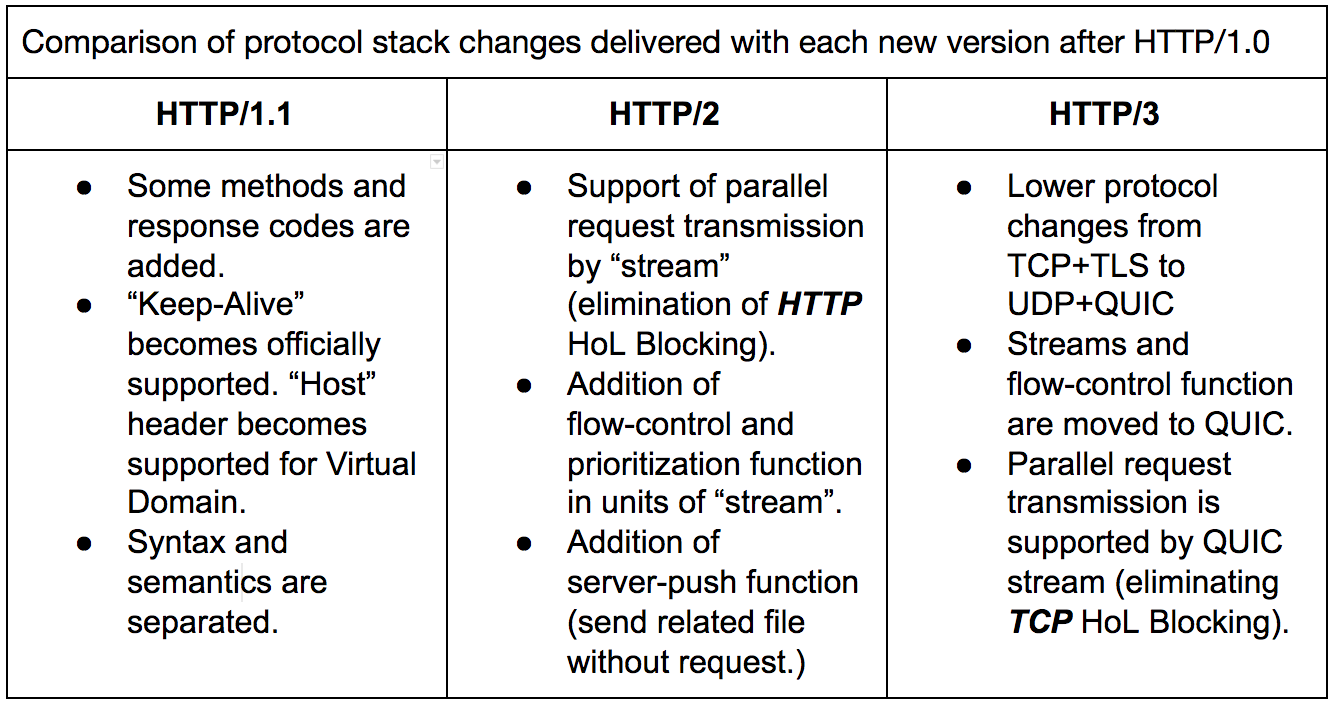

The fundamental difference between HTTP/2 and HTTP/3 is that HTTP/3 runs over QUIC, and QUIC runs over connectionless UDP instead of the connection-oriented TCP (used by all previous versions of HTTP).

Comparison of connection-oriented TCP vs. connectionless UDP, as used in QUIC

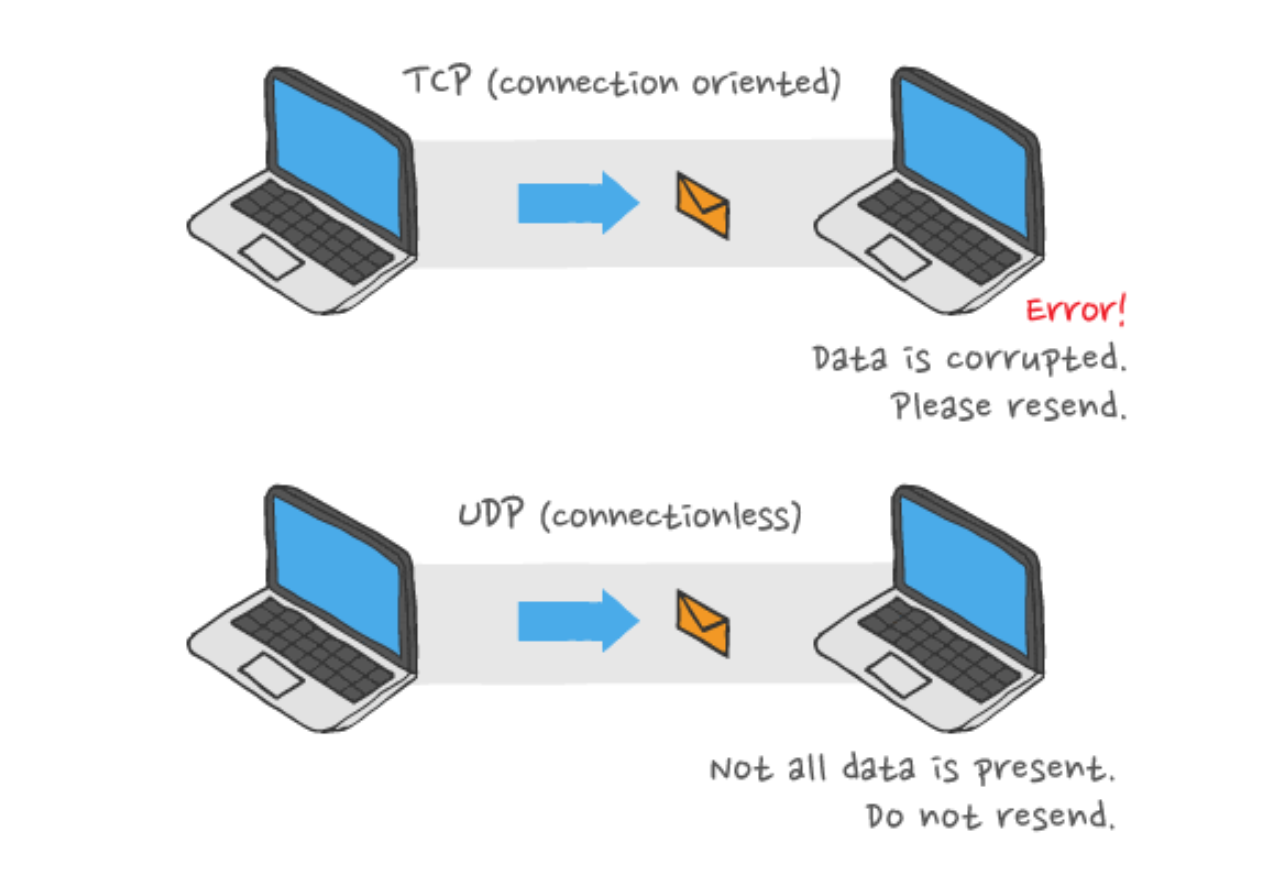
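To make "connectionless" concrete, the following sketch sends a single UDP datagram between two sockets on the local machine using Python's standard `socket` module. The function name is an illustrative assumption. Note that there is no connect/accept step before data flows, and nothing in UDP itself would retransmit the datagram if it were lost.

```python
import socket


def udp_echo_once(payload: bytes) -> bytes:
    """Send one datagram to a local UDP socket and read it back, with no handshake."""
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    receiver.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
    receiver.settimeout(2.0)         # UDP gives no delivery guarantee, so do not wait forever
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        # No connection setup: the very first packet already carries data.
        sender.sendto(payload, receiver.getsockname())
        data, _addr = receiver.recvfrom(2048)
        return data
    finally:
        sender.close()
        receiver.close()
```

Calling `udp_echo_once(b"hello")` normally returns the same bytes on a loopback interface. Over a real network the datagram could be dropped, duplicated, or reordered, and it is this gap that QUIC fills in on top of UDP.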

In terms of syntax and semantic structure, HTTP/3 is similar to HTTP/2. HTTP/3 engages in the same kinds of request/response message exchanges, with a data format that contains methods, headers, status codes, and body. However, a significant difference HTTP/3 introduces is in the stacking order of protocol layers on top of UDP, as shown in the following diagram.

Stacking order in HTTP/3 showing QUIC encompassing the security layer and part of the transport layer

Source: Kinsta

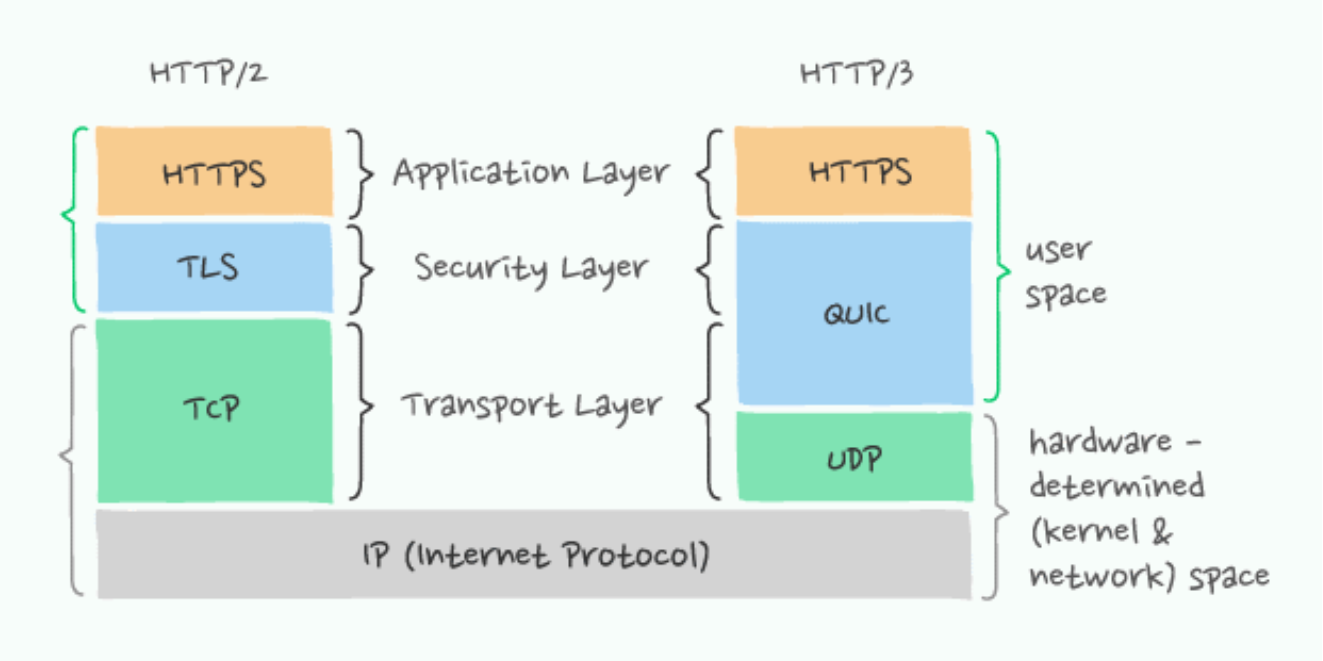

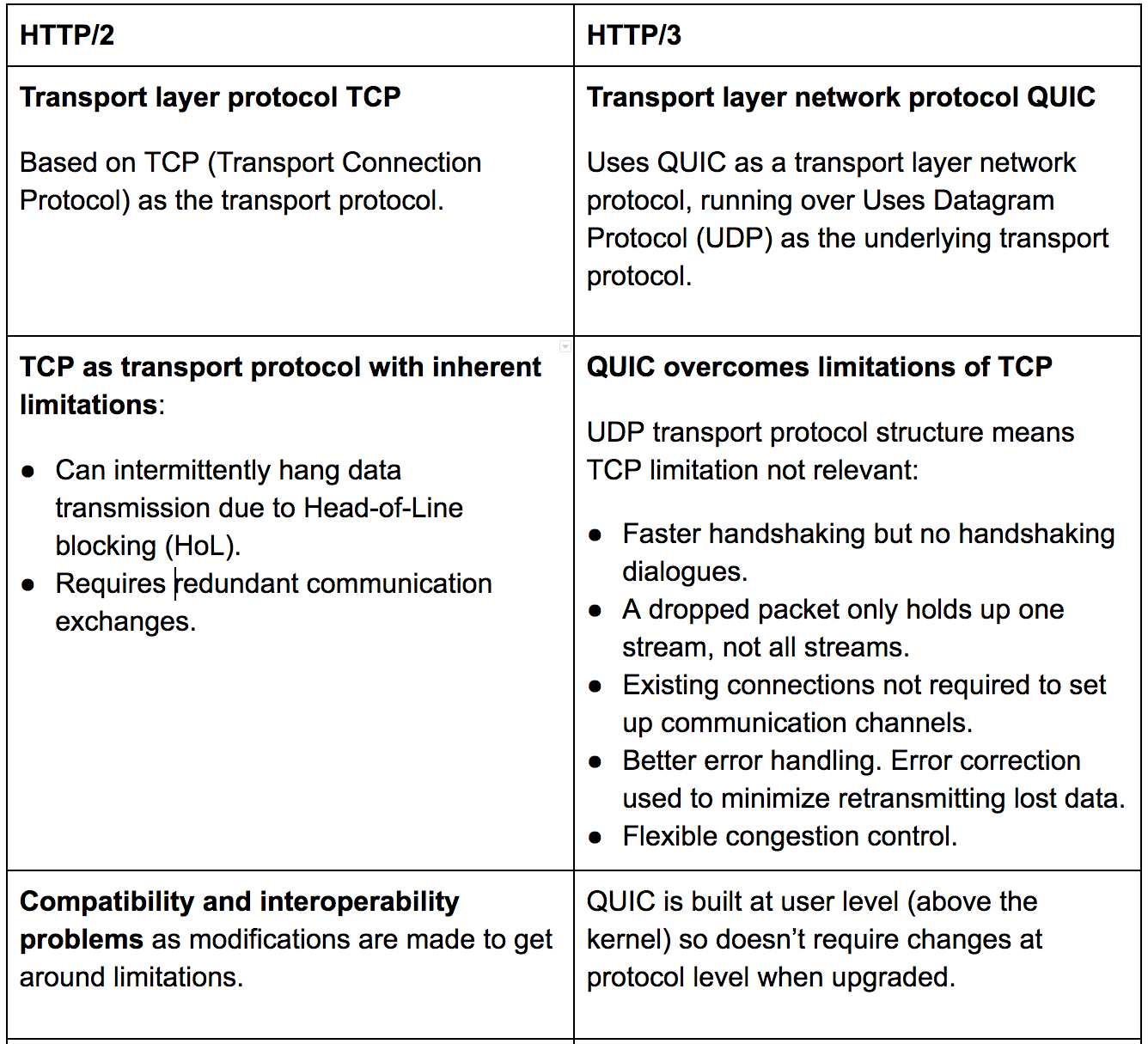

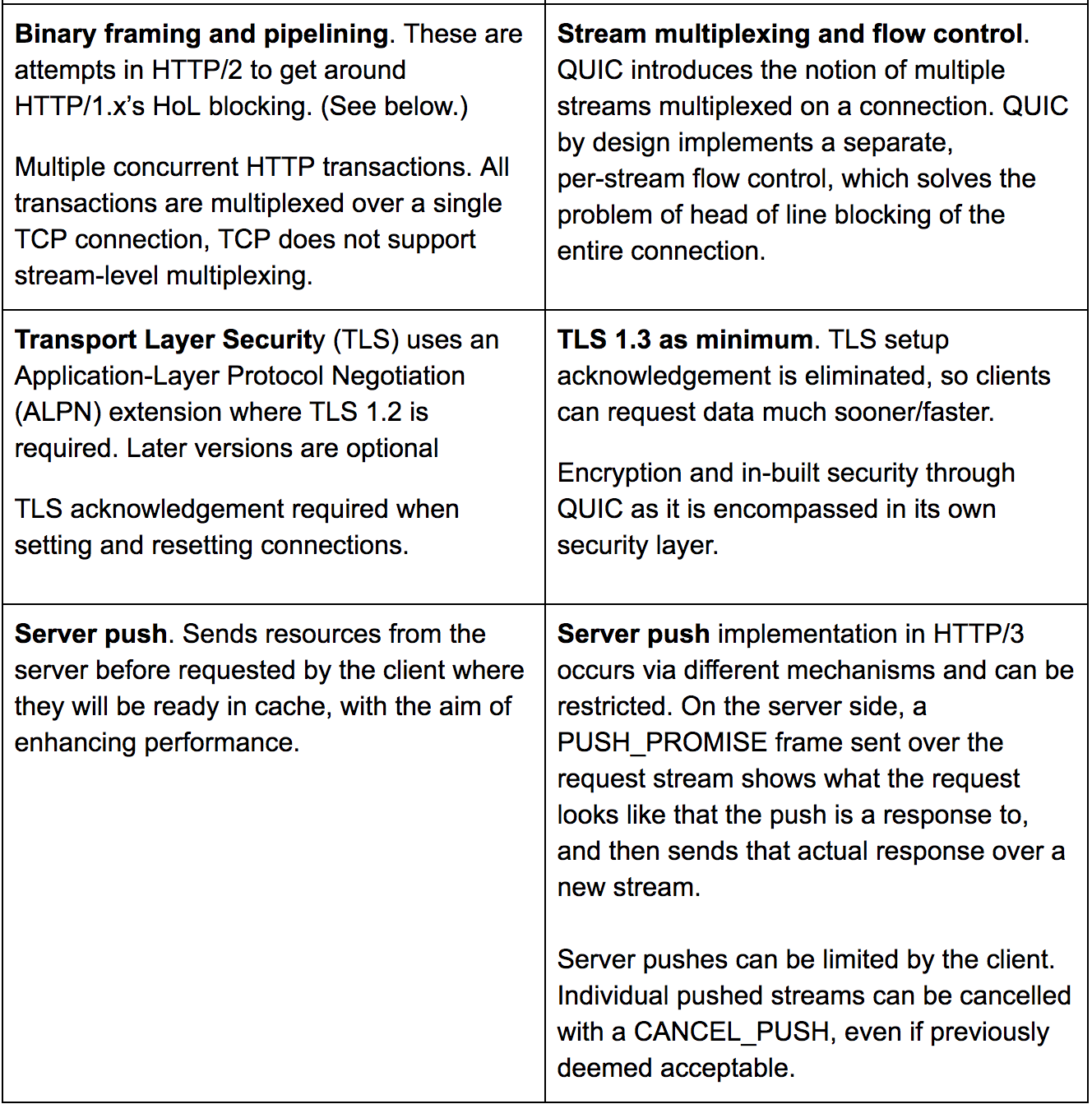

The following table compares the features and capabilities of HTTP/2 with HTTP/3:

Comparison table of features and capabilities of HTTP/2 and HTTP/3 Part 1/2

Comparison table of features and capabilities of HTTP/2 and HTTP/3 Part 2/2

An overview of HTTP/2

HTTP/2 is an extension of HTTP/1.1, not a replacement for it. The application semantics remain the same, with the same HTTP methods, status codes, URIs, and header fields.

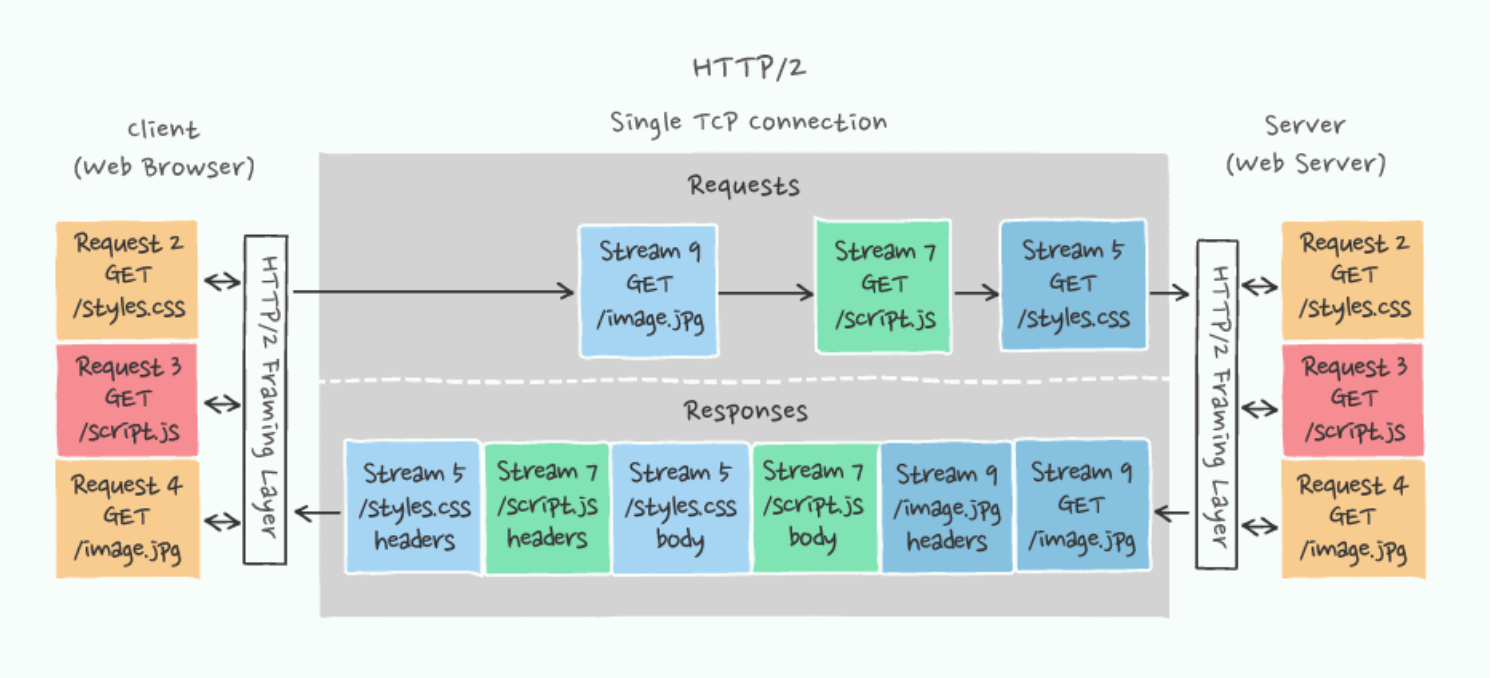

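An HTTP/2 connection does not always start as HTTP/1.1. Over HTTPS, which is how browsers use HTTP/2, the client and server agree on HTTP/2 during the TLS handshake (using ALPN); over cleartext, a client can start with HTTP/1.1 and ask to upgrade. Either way, HTTP/2 uses a single TCP connection between the client and the server, which remains open for the duration of the interaction.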

Requests and responses between a client and a server over TCP, the transport protocol underlying HTTP/2.

Source: https://labs.tadigital.com/index.php/2019/11/28/http-2-vs-http-3/

HTTP/2 introduced a number of features designed to improve performance:

A binary framing layer creating an interleaved communication stream.

Full multiplexing instead of forced ordering and thus blocking (which means it can use one connection for parallelism).

Header compression to reduce overhead.

Proactive “push” responses from servers into client caches.
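The binary framing layer is what makes multiplexing possible: every frame starts with a fixed 9-byte header that says how long the frame is, what type it is, and which stream it belongs to. The sketch below parses that header following the layout in RFC 7540 (24-bit length, 8-bit type, 8-bit flags, one reserved bit, 31-bit stream identifier). The `FrameHeader` and `parse_frame_header` names are illustrative, and the example bytes are hand-built rather than captured traffic.

```python
import struct
from typing import NamedTuple

# Frame type codes defined in RFC 7540.
FRAME_TYPES = {
    0x0: "DATA", 0x1: "HEADERS", 0x2: "PRIORITY", 0x3: "RST_STREAM",
    0x4: "SETTINGS", 0x5: "PUSH_PROMISE", 0x6: "PING", 0x7: "GOAWAY",
    0x8: "WINDOW_UPDATE", 0x9: "CONTINUATION",
}


class FrameHeader(NamedTuple):
    length: int
    type_name: str
    flags: int
    stream_id: int


def parse_frame_header(data: bytes) -> FrameHeader:
    """Decode the fixed 9-byte header at the start of an HTTP/2 frame."""
    if len(data) < 9:
        raise ValueError("an HTTP/2 frame header is 9 bytes long")
    # 1 + 2 bytes of length, 1 byte type, 1 byte flags, 4 bytes stream id.
    length_hi, length_lo, frame_type, flags, stream = struct.unpack("!BHBBI", data[:9])
    return FrameHeader(
        length=(length_hi << 16) | length_lo,
        type_name=FRAME_TYPES.get(frame_type, "UNKNOWN"),
        flags=flags,
        stream_id=stream & 0x7FFFFFFF,  # drop the reserved top bit
    )


headers_frame = parse_frame_header(bytes.fromhex("00000d010400000001"))
data_frame = parse_frame_header(bytes.fromhex("000005000100000003"))
```

Here the first header describes a 13-byte HEADERS frame on stream 1 and the second a 5-byte DATA frame on stream 3. Because every frame names its stream, frames from different requests can be interleaved on one connection and reassembled on the other side.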

HTTP/2: Pros and cons

All major browsers support HTTP/2, but only over HTTPS, so the server needs a TLS (SSL) certificate.

HTTP/2 allows the client to send all requests concurrently over a single TCP connection. Theoretically, the client should receive the resources faster.

TCP is a reliable, stable connection protocol.

Concurrent requests can increase the load on the servers. HTTP/2 servers can receive requests in large batches, which can lead to requests timing out. The issue of server load spiking can be solved by inserting a load balancer or a proxy server, which can throttle the requests.

Server support for HTTP/2 prioritization is not yet mature. Software support is still evolving. Some CDNs or load balancers may not support prioritization properly.

The HTTP/2 push feature can be tricky to implement correctly.

HTTP/2 addressed HTTP head-of-line blocking, but TCP-level blocking can still cause problems.
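The throttling idea mentioned above can be sketched with a concurrency gate: however many requests arrive at once, only a fixed number are allowed through to the backend at any moment. This is a minimal simulation using Python's `asyncio.Semaphore`; the function name, the limit of 8, and the 10 ms of simulated work are illustrative assumptions, and a real proxy or load balancer would apply the same idea in its own configuration.

```python
import asyncio


async def handle_burst(num_requests: int, max_concurrent: int) -> int:
    """Process a burst of simulated requests and return the peak number in flight."""
    gate = asyncio.Semaphore(max_concurrent)
    in_flight = 0
    peak = 0

    async def handle(_request_id: int) -> None:
        nonlocal in_flight, peak
        async with gate:  # excess requests wait here instead of hitting the backend
            in_flight += 1
            peak = max(peak, in_flight)
            await asyncio.sleep(0.01)  # stand-in for real backend work
            in_flight -= 1

    await asyncio.gather(*(handle(i) for i in range(num_requests)))
    return peak


# 50 requests arrive together, but at most 8 reach the backend at once.
peak = asyncio.run(handle_burst(num_requests=50, max_concurrent=8))
```

The trade-off is that queued requests wait longer, so the limit has to be tuned against timeouts on the client side.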

What use cases is HTTP/2 suitable for?

HTTP/2 supports all use cases of HTTP/1.x wherever it is implemented, including desktop and mobile web browsers, web APIs, and web servers. It can also be used in proxy servers, reverse proxy servers, firewalls, and content delivery networks, and in the following circumstances:

With applications where response time isn’t critical.

With timing-critical applications, such as realtime messaging or streaming applications, but only if suitable adaptive technologies are used, such as WebSockets, Server-Sent Events (SSE), or publish-subscribe (pub/sub) messaging.

Where a reliable connection is needed (a strength of TCP).

With constrained IoT devices.

An overview of HTTP/3

HTTP/3 enables fast, reliable, and secure connections. It encrypts Internet transport by default using Google’s QUIC protocol.

HTTP/3: Pros and cons

The introduction of a new transport protocol, QUIC, running over UDP means lower latency, both in theory and, so far, in experiments.

Because UDP does not perform error checking and correction in the protocol stack, it is suitable for use cases where these are either not required or are performed in the application. This means UDP avoids any associated overhead. UDP is often used in time-sensitive applications, such as realtime systems, which cannot afford to wait for packet retransmission and therefore tolerate some dropped packets.

Transport layer ramifications. Transitioning to HTTP/3 involves not only a change in the application layer but also a change in the underlying transport layer. Hence adoption of HTTP/3 is a bit more challenging compared to its predecessor.

Reliability issues. UDP applications tend to lack reliability: a degree of packet loss, re-ordering, errors, or duplication must be accepted. It is up to whatever runs on top of UDP to provide any necessary handshaking, such as real-time confirmation that a message has been received (in HTTP/3, QUIC takes on this role).

HTTP/3 is not yet standardized.

What use cases is HTTP/3 suitable for?

Real-time applications such as online games, ad bidding, and Voice over IP, and where Real Time Streaming Protocol is used.

Broadcast information such as in many kinds of service discovery and shared information such as Precision Time Protocol and Routing Information Protocol. This is because UDP supports multicast.

IoT. HTTP/3 can address the issues of lossy wireless connection for such IoT use cases as mobile devices that gather data from attached sensors.

Big data. As HTTP/3 becomes sufficiently robust, hosted API services will be able to be streamed, and then monetized as the data is transformed into business intelligence.

Web-based virtual reality. VR applications demand more bandwidth to render intricate details of a virtual scene and will surely benefit from migrating to HTTP/3 powered by QUIC.

Microservices: faster (or no) handshakes means faster traversal of the microservices mesh.
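The handshake saving can be put into rough numbers. The sketch below counts the round trips needed before the first request can be sent: one for the TCP handshake plus two for TLS 1.2 or one for TLS 1.3, versus one combined round trip for a first QUIC connection and none when QUIC resumes a previous session with 0-RTT. It is an idealized model that ignores packet loss and server processing time, and the 50 ms round-trip time is an arbitrary example value.

```python
# Round trips spent on connection setup before the first request can be sent.
SETUP_ROUND_TRIPS = {
    "TCP + TLS 1.2": 3,            # 1 for TCP, 2 for TLS 1.2
    "TCP + TLS 1.3": 2,            # 1 for TCP, 1 for TLS 1.3
    "QUIC (first connection)": 1,  # transport and crypto handshake combined
    "QUIC (0-RTT resumption)": 0,  # request data travels with the first packet
}


def time_to_first_response_ms(setup_round_trips: int, rtt_ms: float) -> float:
    """Connection setup plus one round trip for the request/response itself."""
    return (setup_round_trips + 1) * rtt_ms


estimates = {
    name: time_to_first_response_ms(trips, rtt_ms=50.0)
    for name, trips in SETUP_ROUND_TRIPS.items()
}
```

With a 50 ms round trip, this gives 200 ms and 150 ms for the two TCP-based stacks, against 100 ms for a new QUIC connection and 50 ms for a resumed one. In a microservices mesh where one user request fans out into many short internal calls, those savings add up on every hop that opens a fresh connection.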

Getting started with HTTP/3: Open source solutions for HTTP/3

The HTTP Working Group at IETF is still working on releasing HTTP/3, so it is not yet officially supported by web servers such as NGINX and Apache. However, several software libraries are available to experiment with this new protocol, and unofficial patches are also available.

Here is a list of the software libraries that support HTTP/3 and QUIC transport. Note that these implementations are based on one of the internet draft standard versions, which is likely to be superseded by a higher version leading up to the final standard published in an RFC.

quiche (https://github.com/cloudflare/quiche): quiche provides a low-level programming interface for sending and receiving packets over the QUIC protocol, along with an HTTP/3 module for sending HTTP packets over its QUIC implementation. It also provides an unofficial patch for NGINX that lets you install and host a web server capable of running HTTP/3. In addition, wrappers are available for supporting HTTP/3 on Android and iOS mobile apps.

Aioquic (https://github.com/aiortc/aioquic): Aioquic is a Python implementation of QUIC. It also includes a built-in test server and client library for HTTP/3. Aioquic is built on top of the asyncio module, which is Python’s standard asynchronous I/O framework.

Neqo (https://github.com/mozilla/neqo): Neqo is Mozilla’s implementation of QUIC and HTTP/3 using Rust.

If you want to play around with QUIC, visit the page of open source implementations of the QUIC protocol, maintained by the QUIC working group.

Note: HTTP/3 is not enabled in browsers by default, and you must enable it yourself.

Difficulties in implementing HTTP/3

Transitioning to HTTP/3 involves not only a change in the application layer but also a change in the underlying transport layer. The change in transport layer protocols may prove problematic. Security services are often built on the premise that application traffic (HTTP for the most part) will be transported over TCP, the reliable, connection-oriented protocol. As such, adoption of HTTP/3 is somewhat more challenging than that of HTTP/2, which required a change in the application layer alone.

The transport layer undergoes much scrutiny by the middleboxes in the network. These middleboxes, such as firewalls, proxies, and NAT devices, perform a lot of deep packet inspection to meet their functional requirements. A firewall’s default packet filtering policies, accustomed to mostly (or only) TCP traffic, can sometimes deprioritize or block prolonged UDP sessions.

Additionally, changing transport from TCP to UDP could have a significant impact on the ability of security infrastructure to parse and analyse application traffic simply because UDP is a datagram-based (packet) protocol and can be unreliable by definition. As a result, the introduction of a new transport mechanism introduces some complications for IT infrastructure and operations teams.

With the standardization efforts underway at IETF, these issues will eventually be ironed out. And given the positive results shown by Google’s early experiments with QUIC, there is overwhelming support in favour of HTTP/3, which will eventually force middlebox vendors to standardize.

Conclusion

HTTP/3 provides a good deal of the performance and security improvements wanted and needed for the next stage of the evolution of the internet. Despite that, and despite the fact that there’s a demonstrable need for enhancements to HTTP/2, the jury is still out on whether HTTP/3 will, in fact, end up being the wholesale improvement of the state of the HTTP/2 web as it stands and moves today.

Even though adoption by browsers and platforms has begun, HTTP/3 has yet to pass to the RFC stage (as of January 2021), and it is too early to make pronouncements on its uptake and implementation successes. As for realtime applications, well-established technologies such as WebSockets, Server-Sent Events (SSE), and publish-subscribe (pub/sub) are still perfectly capable of providing the required functionality in HTTP/2.

In addition, the move to HTTP/3 with the QUIC protocol and UDP (instead of TCP) leaves some questions of QoS in use cases with reliable internet connections. This adds to the uncertainty inherent in using an emerging protocol.

HTTP/3 is an exciting new development of the storied web protocol, and it will be the appropriate solution for many present or future use cases. But there is plenty of life left yet in the standard it aims to improve, and HTTP/2 is not going away any time soon.

About Ably

Ably is an enterprise-grade pub/sub messaging platform. We make it easy to efficiently design, quickly ship, and seamlessly scale critical realtime functionality delivered directly to end-users. Every day we deliver billions of realtime messages to millions of users for thousands of companies.

We power the apps that people, organizations, and enterprises depend on every day, like Lightspeed System’s realtime device management platform for over seven million school-owned devices, Vitac’s live captioning for 100s of millions of multilingual viewers for events like the Olympic Games, and Split’s realtime feature flagging for one trillion feature flags per month.

We’re the only pub/sub platform with a suite of baked-in services to build complete realtime functionality: presence shows a driver’s live GPS location on a home-delivery app, history instantly loads the most recent score when opening a sports app, stream resume automatically handles reconnection when swapping networks, and our integrations extend Ably into third-party clouds and systems like AWS Kinesis and RabbitMQ. With 25+ SDKs we target every major platform across web, mobile, and IoT.

Our platform is mathematically modeled around Four Pillars of Dependability so we’re able to ensure messages don’t get lost while still being delivered at low latency over a secure, reliable, and highly available global edge network.

Take our APIs for a spin and see why developers from startups to industrial giants choose to build on Ably to simplify engineering, minimize DevOps overhead, and increase development velocity.
