We all expect online experiences to happen in realtime. Messages should arrive instantly, dashboards should deliver business metrics as they happen, and live sports scores should broadcast to fans around the world in a blink.

This expectation is even higher for realtime chat and messaging, which is now embedded in everything from e-commerce platforms to online gaming. But building a competitive instant messaging or live chat app requires some heavy lifting—especially if you’re starting from scratch.

To help you hit the ground running, this article provides an overview of what live chat and instant messaging app development involves, including the features you need, the challenges you can expect, and what it takes to deliver reliable, scalable realtime messaging.

The foundations of a successful chat or messaging app

Let’s start by taking a closer look at what every winning chat app and instant messaging platform has in common.

- Low-latency data delivery: The promise of realtime means users expect to, and should, receive messages on their devices without delay. This relies on a combination of factors, including high throughput, an efficient, low-latency messaging protocol (like WebSockets), and geographic proximity between users and servers.

- Message delivery guarantees: Chat and instant messaging rely on messages being delivered to the right client, in the right order, at the right time. Ensuring data integrity is difficult, but it's critical to prevent missed, out-of-order, or duplicate messages.

- Scalability: A highly available and resilient infrastructure allows a realtime chat or instant messaging app to grow in line with its user base. The Pub/Sub messaging pattern is a good foundation for this, since it decouples the communication logic from the business logic, making your system easier to scale.

- Elasticity: A chat or instant messaging app that crashes when it gets more users than expected will never remain competitive. To succeed in the long term, your app has to be able to react to sudden changes and elastically scale to handle a high, unpredictable, and fluctuating number of concurrent users.

- Reliability: Chat and instant messaging need to be always available—even if something goes wrong. Building in redundancy ensures service continuity even in the face of failure, either at a regional level (instances and datacenters) or at a global level (network issues, outages, or even DDoS attacks).

- Features for a richer realtime messaging experience: Chat and instant messaging should be fun as well as functional. The standard is to send and receive messages, but the bar is now higher since chat apps like WhatsApp, Messenger, and Slack popularized features like emoji reactions, read receipts, and GIFs to upgrade the chat experience.
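
Delivery guarantees like these are typically built on message identifiers. The sketch below shows the client half under one stated assumption: the server stamps every message on a channel with a gap-free, increasing `seq` number (a hypothetical wire format, not any specific product's). Duplicates are dropped, and out-of-order arrivals are held back until the gap is filled.

```typescript
// Hypothetical message shape: assumes the server stamps every message on a
// channel with a gap-free sequence number starting at 1.
interface ChatMessage {
  seq: number;
  text: string;
}

// Hands messages to the UI exactly once and in sequence order.
class OrderedReceiver {
  private nextSeq = 1; // next sequence number we are allowed to deliver
  private pending = new Map<number, ChatMessage>(); // early arrivals, keyed by seq
  readonly delivered: ChatMessage[] = [];

  receive(msg: ChatMessage): void {
    // Already delivered or already buffered: it's a duplicate, so drop it.
    if (msg.seq < this.nextSeq || this.pending.has(msg.seq)) return;
    this.pending.set(msg.seq, msg);

    // Flush every consecutive message starting at nextSeq.
    let next = this.pending.get(this.nextSeq);
    while (next !== undefined) {
      this.delivered.push(next);
      this.pending.delete(this.nextSeq);
      this.nextSeq += 1;
      next = this.pending.get(this.nextSeq);
    }
  }

  // Sequence numbers still missing before buffered messages can be shown.
  // A real client would fetch these from history if they don't arrive soon.
  missing(): number[] {
    let highest = 0;
    this.pending.forEach((_msg, seq) => {
      if (seq > highest) highest = seq;
    });
    const gaps: number[] = [];
    for (let s = this.nextSeq; s < highest; s++) {
      if (!this.pending.has(s)) gaps.push(s);
    }
    return gaps;
  }
}

// Usage: message 2 arrives late and message 1 arrives twice.
const receiver = new OrderedReceiver();
receiver.receive({ seq: 1, text: "hi" });
receiver.receive({ seq: 3, text: "how are you?" });
console.log(receiver.missing()); // [ 2 ]
receiver.receive({ seq: 1, text: "hi" });
receiver.receive({ seq: 2, text: "it's me" });
console.log(receiver.delivered.map((m) => m.seq)); // [ 1, 2, 3 ]
```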

What instant messaging or chat app features to build

The idea of building a chat or instant messaging app is simple, but with the likes of Slack and WhatsApp setting the standard for realtime messaging, it’s not enough to just set up a sleek UI that lets users send and receive messages.

With that in mind, here are the features you need for a well-rounded experience that won’t push users to a better-equipped competitor.

Core instant messaging and chat features: What your app can't do without

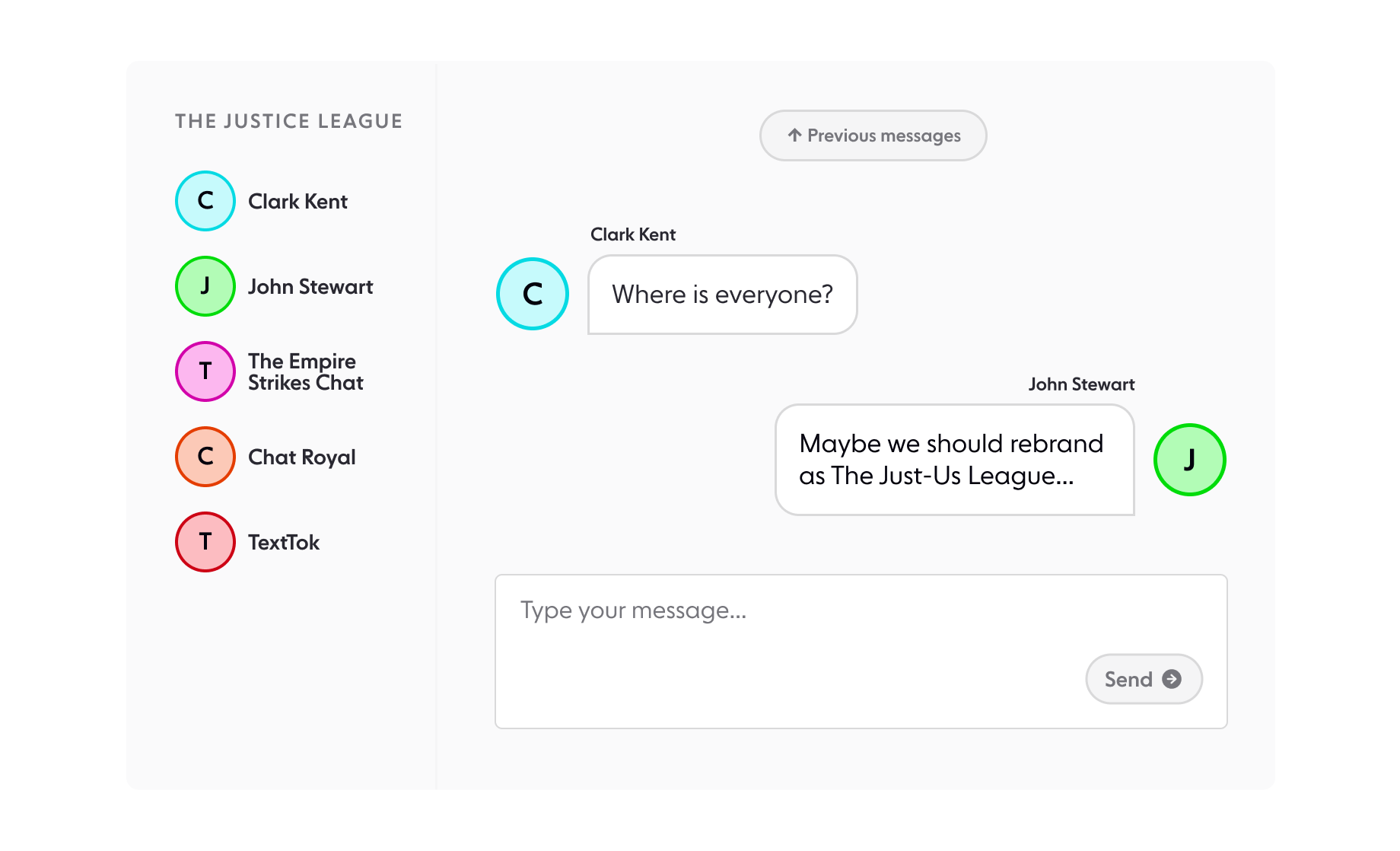

- Realtime messaging: Your chat app must enable users to join a chat to send and receive messages in realtime. Pub/Sub messaging is ideal for chat apps since it supports 1:1 messaging as well as 1:many, which is useful for group chat, chat rooms or channels.

- Message storage: Persist messages so users can retrieve old conversations. You can choose between different storage options and differing data retention policies, depending on the specifics of your use case.

- Authentication and authorization: Your chat app needs a way to manage access for chat participants. This can be through a third-party login with their social media account, or simply their email address or phone number. You also need to consider managing permissions for different roles, like only allowing admins to moderate a group chat.

- Access to contacts: Your chat app must give users a simple way to import their contacts. It’s also a good idea to show which contacts are already registered in the app and give the option to send an invite to those who aren’t.
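
Pub/Sub maps naturally onto chat: each conversation is a channel, a group chat is a channel with many subscribers, and a 1:1 chat is a channel with exactly two. The in-memory sketch below illustrates the pattern, plus a bounded message history as a stand-in for storage and retention. The `Broker` class and the `dm:` naming convention are hypothetical; a real system would put a network layer and durable storage behind them.

```typescript
// Illustrative message shape; field names are assumptions.
interface Envelope {
  channel: string;
  from: string;
  text: string;
}

type Listener = (msg: Envelope) => void;

// A minimal in-memory Pub/Sub broker. A real deployment would sit behind a
// network protocol such as WebSockets and persist history durably.
class Broker {
  private subscribers = new Map<string, Set<Listener>>();
  private history = new Map<string, Envelope[]>();
  private readonly historyLimit: number;

  constructor(historyLimit: number) {
    this.historyLimit = historyLimit;
  }

  // Returns an unsubscribe function.
  subscribe(channel: string, listener: Listener): () => void {
    const set = this.subscribers.get(channel) ?? new Set<Listener>();
    set.add(listener);
    this.subscribers.set(channel, set);
    return () => {
      set.delete(listener);
    };
  }

  publish(msg: Envelope): void {
    // Store first, keeping only the newest `historyLimit` messages
    // (a simple stand-in for a data retention policy).
    const log = this.history.get(msg.channel) ?? [];
    log.push(msg);
    if (log.length > this.historyLimit) log.shift();
    this.history.set(msg.channel, log);

    // Fan out to every current subscriber of the channel (1:many).
    this.subscribers.get(msg.channel)?.forEach((listener) => listener(msg));
  }

  // Recent messages, so a user who opens a conversation sees older ones.
  recent(channel: string): Envelope[] {
    return (this.history.get(channel) ?? []).slice();
  }
}

// 1:1 chat as a channel with two members; sorting the IDs means both users
// derive the same channel name (a hypothetical naming convention).
function directChannel(a: string, b: string): string {
  return "dm:" + [a, b].sort().join(":");
}

// Usage: a group room with two subscribers, then a direct message.
const broker = new Broker(50);
const seen: string[] = [];
broker.subscribe("room:general", (m) => seen.push(`alice got "${m.text}"`));
broker.subscribe("room:general", (m) => seen.push(`bob got "${m.text}"`));
broker.publish({ channel: "room:general", from: "carol", text: "hello all" });
broker.publish({ channel: directChannel("bob", "alice"), from: "bob", text: "psst" });
console.log(seen.length); // 2
console.log(broker.recent(directChannel("alice", "bob")).length); // 1
```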

Enhanced instant messaging and chat features: Taking your app beyond the basics

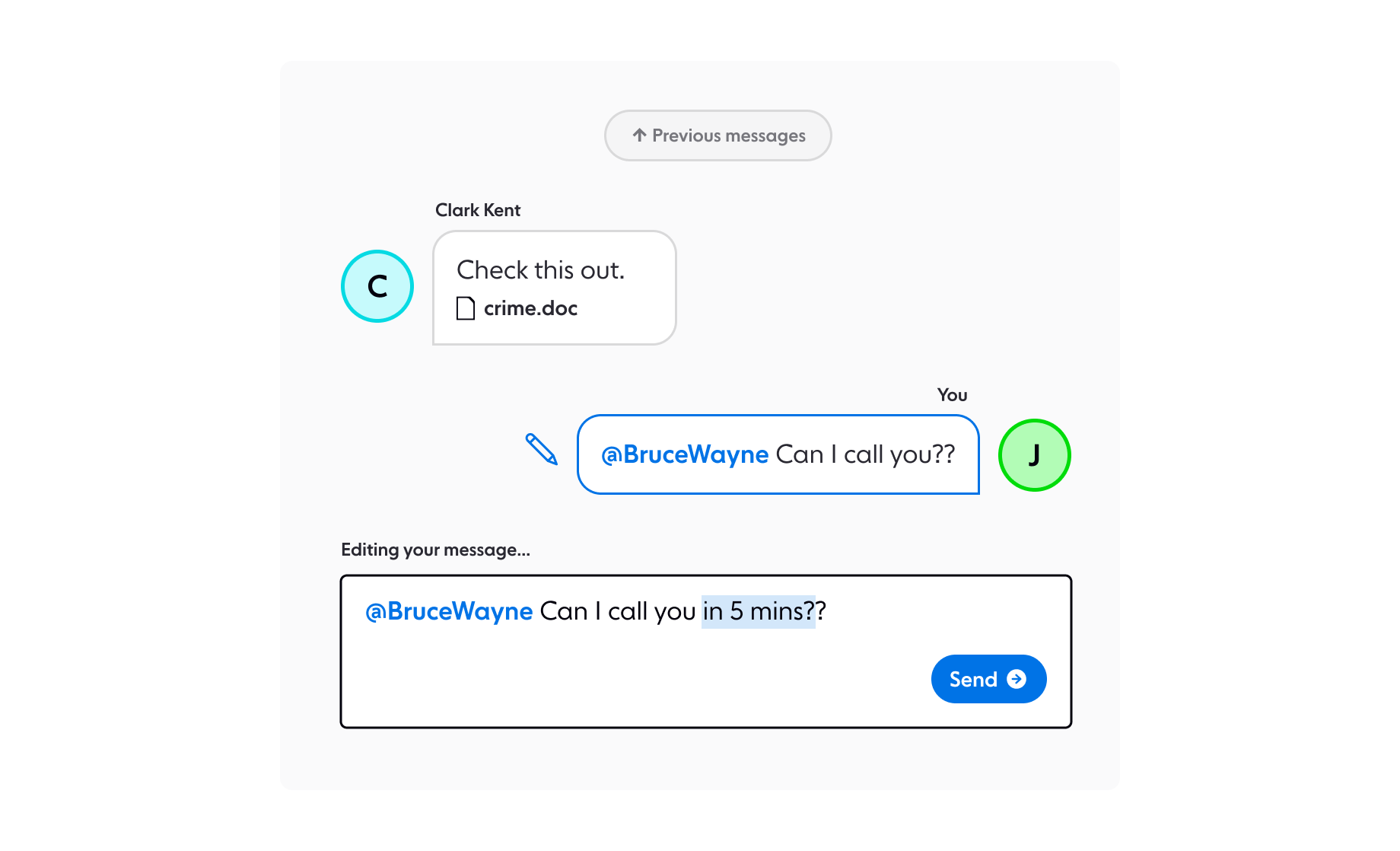

- Edit/delete messages: Your chat app should give users the option to edit or unsend a published message. This means thinking about the most suitable data structures for searchability, and making sure the message payload is properly updated and stored.

- Complex text: Your chat app should be able to interpret text that triggers a specific action. For example, URLs should become clickable or show a media thumbnail, and a name tagged with @ should be highlighted and trigger a notification to the tagged user.

- File sharing: Adding support for different file formats to your instant messaging or chat app will enable users to send photos, videos and audio to liven up the conversation. This is particularly important for instant messaging apps, where participants are engaging in social interactions. Common protocols for this include WebRTC, WebSockets, and IRC.

- Audio/video calling: If you want your chat or instant messaging app to emulate the likes of WhatsApp, use a protocol like WebRTC to enable video calls within your chat app, or integrate third-party APIs that handle everything for you.

- Push notifications: Although the in-app experience is important, making sure users are aware of new messages even when they're not using the app is key to engagement. This is done by sending push notifications to users' devices, typically through platform services such as Apple Push Notification service (APNs) and Firebase Cloud Messaging (FCM).
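
The "complex text" feature above boils down to tokenizing each message before rendering it. This sketch splits a message into plain text, @mentions, and links, then collects the users to notify. The token types and regular expression are illustrative assumptions; a production parser would handle many more edge cases.

```typescript
// Hypothetical token types a chat UI could render.
type Token =
  | { kind: "text"; value: string }
  | { kind: "mention"; user: string }
  | { kind: "link"; url: string };

// Deliberately simple pattern: an @name not preceded by a word character
// (so "bob@example.com" is not a mention), or an http(s) URL up to the next
// whitespace. Trailing punctuation after a URL is not handled here.
const PATTERN_SOURCE = "(?<!\\w)@(\\w+)|https?://\\S+";

function tokenize(input: string): Token[] {
  const tokens: Token[] = [];
  const re = new RegExp(PATTERN_SOURCE, "g"); // fresh lastIndex per call
  let last = 0;
  let match: RegExpExecArray | null;
  while ((match = re.exec(input)) !== null) {
    if (match.index > last) {
      tokens.push({ kind: "text", value: input.slice(last, match.index) });
    }
    const user = match[1]; // only set when the mention branch matched
    if (user !== undefined) {
      tokens.push({ kind: "mention", user });
    } else {
      tokens.push({ kind: "link", url: match[0] });
    }
    last = match.index + match[0].length;
  }
  if (last < input.length) tokens.push({ kind: "text", value: input.slice(last) });
  return tokens;
}

// Each distinct mentioned user should be notified once.
function usersToNotify(tokens: Token[]): string[] {
  const users = new Set<string>();
  for (const t of tokens) {
    if (t.kind === "mention") users.add(t.user);
  }
  return Array.from(users);
}

const tokens = tokenize("@alice see https://example.com/x and ask @bob or @alice");
console.log(usersToNotify(tokens)); // [ 'alice', 'bob' ]
```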

Rich instant messaging and chat UI and UX features: Upgrading the in-app experience

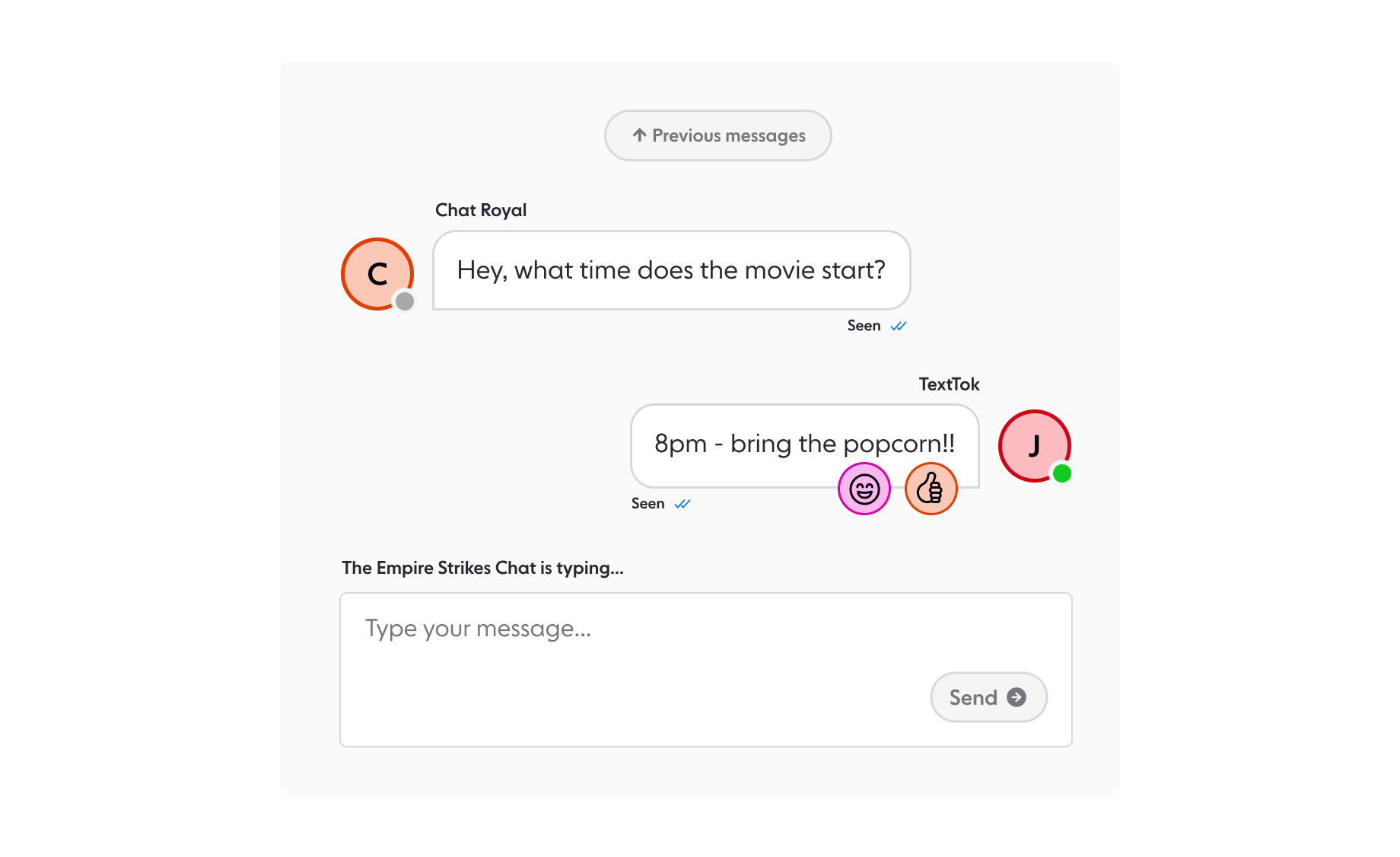

- Read receipts: Update your UI to show users when a message has been successfully sent, delivered, and read. You could also show if a message has been edited.

- Presence indicators: Give visibility into what others in the conversation are doing in realtime, like when they’re online or the last time they were active in the app.

- Message reactions: Give users who are in a hurry (or lost for words) a quick way to respond by adding an emoji, like a heart or a thumbs up, to a published message.

- App updates: Show what’s changed since the user was last active in the app, like how many unread messages they have, or how many times they’ve been mentioned in a thread.
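
Read receipts and "what's changed" summaries mostly come down to small pieces of state. A minimal sketch, assuming messages carry a per-conversation sequence number and a list of mentioned users (hypothetical shapes, not a particular product's API):

```typescript
// Receipt states, in the order a message moves through them.
const RECEIPT_STATES = ["sent", "delivered", "read"] as const;
type ReceiptState = (typeof RECEIPT_STATES)[number];

// Receipts can arrive out of order (e.g. a late "delivered" after "read"),
// so only ever move a message's state forward.
function advanceReceipt(current: ReceiptState, incoming: ReceiptState): ReceiptState {
  return RECEIPT_STATES.indexOf(incoming) > RECEIPT_STATES.indexOf(current) ? incoming : current;
}

// Hypothetical message shape: a per-conversation sequence number plus the
// users mentioned in the message.
interface StoredMessage {
  seq: number;
  mentions: string[];
}

// "What's changed since you were last active": unread messages and mentions
// after the user's last-read position.
function catchUp(messages: StoredMessage[], lastReadSeq: number, userId: string) {
  const unread = messages.filter((m) => m.seq > lastReadSeq);
  return {
    unread: unread.length,
    mentions: unread.filter((m) => m.mentions.indexOf(userId) !== -1).length,
  };
}

const conversation: StoredMessage[] = [
  { seq: 1, mentions: [] },
  { seq: 2, mentions: ["dana"] },
  { seq: 3, mentions: [] },
  { seq: 4, mentions: ["dana", "eli"] },
];
console.log(advanceReceipt("read", "delivered")); // read
console.log(catchUp(conversation, 1, "dana")); // { unread: 3, mentions: 2 }
```

Storing a single last-read position per user and conversation, rather than a flag on every message, keeps these updates cheap.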

The challenges of building an instant messaging or realtime chat app

It’s one thing to map out what to build for your chat app, but engineering a dependable chat system is a whole other ballgame. The time you spend battling code, uprooting issues, and juggling protocols can add up quickly—and time is money.

To help you better prepare, here’s an overview of the most common challenges.

Performance

This isn’t just about minimizing latency and bandwidth requirements to send data end-to-end, but also minimizing the variance in them. Too much variance can make messaging unpredictable and create an exasperating user experience. It can also make building and scaling features tricky since you wouldn’t know for certain whether they’ll perform as expected in varied operating conditions.

This is where a PaaS comes in handy. A provider that publishes its performance figures tells you what median latency and throughput to expect, as well as the specific operating boundaries to design and build new features around.

TIP: Realtime messaging applications, especially those with interactive sessions like voice or video calls, are best experienced with latencies under 100ms.
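
Because variance matters as much as the average, latency is usually tracked with percentiles rather than means. This sketch computes nearest-rank percentiles and the population standard deviation for two sets of invented round-trip samples (in milliseconds) that share a median but differ sharply in the tail.

```typescript
// Nearest-rank percentile: the smallest sample such that at least p% of all
// samples are less than or equal to it.
function percentile(samples: number[], p: number): number {
  if (samples.length === 0) throw new Error("no samples");
  const sorted = samples.slice().sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(rank, 1) - 1];
}

// Population standard deviation: how widely latencies spread around the mean.
function stdDev(samples: number[]): number {
  const mean = samples.reduce((sum, x) => sum + x, 0) / samples.length;
  const variance = samples.reduce((sum, x) => sum + (x - mean) ** 2, 0) / samples.length;
  return Math.sqrt(variance);
}

// Two hypothetical connections with the same median but different tails.
const steady = [48, 50, 51, 52, 49, 50, 53, 51, 50, 52];
const jittery = [20, 25, 50, 30, 35, 50, 51, 55, 60, 400];

console.log(percentile(steady, 50), percentile(steady, 99)); // 50 53
console.log(percentile(jittery, 50), percentile(jittery, 99)); // 50 400
```

Both connections report a 50 ms median, but the second one's 99th percentile of 400 ms is far beyond the roughly 100 ms the TIP recommends, which is exactly the kind of unpredictability users notice.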

Reliability

Ensuring reliability requires a stable, fault-tolerant architecture that has redundancy built in at a regional (and sometimes global) level. But maintaining a reliable distributed infrastructure comes at a high operating cost, and there’s a long list of capabilities (like global replication and automatic fail safes) that need to be developed.

A common approach is to pay for a PaaS that covers all your bases, unless you have the time and resources to build a fault-tolerant system.
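
On the client side, fault tolerance usually shows up as automatic reconnection with failover to another region. This sketch, assuming a hypothetical list of regional endpoints, computes a reconnection schedule using exponential backoff with "full jitter" and rotates through regions on repeated failures. After reconnecting, a client would also resume from the last message it received so nothing is missed; that part is omitted here.

```typescript
// Hypothetical regional endpoints; real hostnames depend on your deployment.
const ENDPOINTS: readonly string[] = [
  "wss://eu-west.chat.example.com",
  "wss://us-east.chat.example.com",
  "wss://ap-southeast.chat.example.com",
];

// "Full jitter" exponential backoff: wait a random time between 0 and
// min(cap, base * 2^attempt). Randomizing spreads out reconnections so that
// many clients dropped by one outage don't all retry at the same moment.
function backoffDelayMs(
  attempt: number,
  baseMs = 500,
  capMs = 30000,
  random: () => number = Math.random,
): number {
  const ceiling = Math.min(capMs, baseMs * 2 ** attempt);
  return Math.floor(random() * ceiling);
}

// Rotate through regions so repeated failures in one fail over to the next.
function endpointFor(attempt: number, endpoints: readonly string[] = ENDPOINTS): string {
  return endpoints[attempt % endpoints.length];
}

interface Attempt {
  url: string;
  delayMs: number;
}

// Plan the first few reconnection attempts after a dropped connection.
function reconnectPlan(attempts: number, random: () => number = Math.random): Attempt[] {
  const plan: Attempt[] = [];
  for (let attempt = 0; attempt < attempts; attempt++) {
    plan.push({ url: endpointFor(attempt), delayMs: backoffDelayMs(attempt, 500, 30000, random) });
  }
  return plan;
}

// Deterministic demo: a "random" source that always returns 0.5.
console.log(reconnectPlan(4, () => 0.5).map((a) => a.delayMs)); // [ 250, 500, 1000, 2000 ]
```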

Scalability

Architecting your chat app for scale takes more effort upfront since you need to spend more time on design before you build. You also have to make sure you have the funds to host, run, and maintain your app as it grows.

Partnering with a reputable PaaS can provide a highly elastic infrastructure so your app is never short on capacity and your team isn’t putting out operational fires at 3AM.

Cross-platform development

It’s no longer enough to develop your chat app for one lonely device. It now has to be accessible across various platforms and operating systems. Of course, the development time and technical challenges multiply with each additional platform, as you’ll need to navigate different SDKs and programming languages.

Luckily there are providers who offer SDKs targeting multiple platforms, so teams can develop using the languages and tools they already know.

Security and compliance

Security is top of mind for most users today, and messaging in particular is expected to be private and secure. Additionally, you'll most likely need to meet compliance requirements such as GDPR and SOC 2, along with any industry-specific frameworks, like HIPAA for patient privacy.

At a minimum, you need to encrypt data in transit and at rest, and implement robust authentication, as well as a permissions system.
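
Encryption in transit usually comes from serving WebSocket traffic over TLS (`wss://`), while the permissions system is application logic. Below is a minimal role-based sketch, tied to the earlier example of only letting admins moderate a group chat. The roles, capability names, and `can` helper are hypothetical, and the check assumes the user has already been authenticated.

```typescript
// Hypothetical capabilities and roles for a group chat.
type Capability = "read" | "publish" | "delete-any" | "ban";
type Role = "member" | "moderator" | "admin";

// What each role may do; anything not listed is denied by default.
const GRANTS: Record<Role, readonly Capability[]> = {
  member: ["read", "publish"],
  moderator: ["read", "publish", "delete-any"],
  admin: ["read", "publish", "delete-any", "ban"],
};

interface Membership {
  userId: string;
  channel: string;
  role: Role;
}

// Server-side authorization check, run on every action. Roles are per
// channel, so someone can be an admin in one group and a member in another.
function can(memberships: Membership[], userId: string, channel: string, action: Capability): boolean {
  const membership = memberships.find((m) => m.userId === userId && m.channel === channel);
  return membership !== undefined && GRANTS[membership.role].indexOf(action) !== -1;
}

const memberships: Membership[] = [
  { userId: "ana", channel: "team", role: "admin" },
  { userId: "ben", channel: "team", role: "member" },
];
console.log(can(memberships, "ana", "team", "ban")); // true
console.log(can(memberships, "ben", "team", "ban")); // false
console.log(can(memberships, "cy", "team", "read")); // false (not a member)
```

Denying by default means a new capability is unavailable to every role until it is explicitly granted.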

Capabilities to build rich chat

There’s a lot more to chat once you’ve built out the basics. You also have to think about channels, emojis, read receipts, communicating user presence (online, offline, typing), and more. Presence is a particularly tricky feature to handle at scale.

That's a lot of moving parts to develop and maintain in-house, so it's a good idea to integrate APIs that already offer these features and let your team focus on core chat capabilities.
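
One reason presence is hard at scale: if every join is broadcast to everyone already in a channel, N members joining produce on the order of N² presence events. Even the single-server bookkeeping needs care, as this minimal sketch shows. It assumes clients send periodic heartbeats and treats anyone silent for too long as offline; the `PresenceTracker` class and its timeout are hypothetical.

```typescript
type PresenceStatus = "online" | "typing" | "offline";

interface PresenceEntry {
  status: PresenceStatus;
  lastSeen: number; // ms timestamp of the last heartbeat or update
}

// Tracks presence per user. A user silent for longer than timeoutMs is
// reported offline, which covers clients that vanish (closed laptop, lost
// signal) without ever sending an explicit leave.
class PresenceTracker {
  private entries = new Map<string, PresenceEntry>();
  private readonly timeoutMs: number;

  constructor(timeoutMs: number) {
    this.timeoutMs = timeoutMs;
  }

  update(userId: string, now: number, status: PresenceStatus = "online"): void {
    this.entries.set(userId, { status, lastSeen: now });
  }

  leave(userId: string, now: number): void {
    this.entries.set(userId, { status: "offline", lastSeen: now });
  }

  statusOf(userId: string, now: number): PresenceStatus {
    const entry = this.entries.get(userId);
    if (entry === undefined || now - entry.lastSeen > this.timeoutMs) return "offline";
    return entry.status;
  }

  // Powers "last active" labels in the UI.
  lastSeen(userId: string): number | undefined {
    return this.entries.get(userId)?.lastSeen;
  }
}

const presence = new PresenceTracker(30000);
presence.update("ana", 0);
presence.update("ben", 0, "typing");
console.log(presence.statusOf("ben", 10000)); // typing
console.log(presence.statusOf("ana", 45000)); // offline (no heartbeat for 45 s)
```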

How to build a chat or instant messaging app

As we've outlined above, the path to building an instant messaging or realtime chat app can be fraught with challenges. The approach you should take depends on the timeline and budget for your project, the engineering resources you have available, as well as your ambitions.

Whilst most engineering teams can knock out an MVP (minimum viable product) in around 3-5 months, with a budget in the range of $30,000 to $50,000 for a single platform (like Android), the offering will be fairly basic. According to Appinventiv, building a chat app like WhatsApp can cost up to $250,000. And that's just the initial cost to build and manage the system; there's no telling how much you'll spend over time on ongoing scaling, maintenance, and future enhancements.

Given that many of the challenges of building an instant messaging or chat app (performance, reliability, and scalability) are tied to the realtime infrastructure behind it, a common fork in the road is deciding whether to build that infrastructure yourself or use a PaaS that does most of the work for you. Let's dig into the two options.

Option 1: Building the realtime messaging infrastructure yourself

WebSockets are widely used as a key technology for enabling realtime, two-way communication for chat and instant messaging apps. You have the choice of building your own bespoke protocol on top of raw WebSockets, or you can use an open-source WebSocket library to build your chat app.

For example, Socket.IO is a popular WebSocket-based library that's often used to develop chat apps because it can broadcast events to multiple clients, fall back to HTTP long-polling, and reconnect automatically. The downsides of Socket.IO are that it's difficult to scale, has limited native security features, is designed to work in a single region, and by default only guarantees at-most-once delivery, so messages can be lost during disconnections.
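
To see what building your own bespoke protocol on top of raw WebSockets involves, here is a minimal sketch of the message layer you would have to define yourself. The frame types, field names, and `parseFrame`/`replyTo` helpers are hypothetical; in a browser, frames would be sent with the standard `WebSocket.send()` method, for example `socket.send(JSON.stringify(frame))`.

```typescript
// A hypothetical wire format for a bespoke chat protocol on raw WebSockets.
// The WebSocket layer only delivers frames; message types, acknowledgements,
// and validation are all yours to design and maintain.
type Frame =
  | { type: "subscribe"; channel: string }
  | { type: "publish"; channel: string; id: string; text: string }
  | { type: "ack"; id: string };

const isNonEmptyString = (v: unknown): v is string => typeof v === "string" && v.length > 0;

// Parse an incoming text frame, rejecting anything malformed rather than
// trusting what the client sent.
function parseFrame(raw: string): Frame | null {
  let data: unknown;
  try {
    data = JSON.parse(raw);
  } catch {
    return null; // not valid JSON
  }
  if (typeof data !== "object" || data === null) return null;
  const { type, channel, id, text } = data as Record<string, unknown>;
  switch (type) {
    case "subscribe":
      return isNonEmptyString(channel) ? { type: "subscribe", channel } : null;
    case "publish":
      return isNonEmptyString(channel) && isNonEmptyString(id) && typeof text === "string"
        ? { type: "publish", channel, id, text }
        : null;
    case "ack":
      return isNonEmptyString(id) ? { type: "ack", id } : null;
    default:
      return null; // unknown frame type
  }
}

// Server-side sketch: acknowledge each valid publish so the sender can mark
// it as sent, or retry if no ack arrives. Returns the reply frame, if any.
function replyTo(raw: string): string | null {
  const frame = parseFrame(raw);
  if (frame === null || frame.type !== "publish") return null;
  const ack: Frame = { type: "ack", id: frame.id };
  return JSON.stringify(ack);
}

console.log(replyTo('{"type":"publish","channel":"room:1","id":"m-42","text":"hi"}'));
// {"type":"ack","id":"m-42"}
console.log(replyTo("not json")); // null
```

Everything here (plus fan-out, ordering, reconnection, and scaling across servers) is what an open-source library or a PaaS would otherwise handle for you.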

Keep in mind that whether you build your own protocol or use an open-source library, you have to host, scale, and manage the infrastructure yourself.

This brings us to the next DIY option, which is to stitch together different cloud-based services that will host the servers for you. Even so, you still need to configure and optimize how the different services connecting your chat app should interact and operate.

Overall, the DIY approach is certainly doable, but most DIY implementations are barebones and leave a lot for you to figure out on your own. They can prove challenging if you need to scale, or if you have limited engineering resources to do so. In our survey of over 500 engineering leaders in the UK and US, we discovered that:

- 93% of projects required at least 4 in-house engineers to build the realtime messaging infrastructure.

- 10.2 person-months was the average time taken to complete a realtime messaging infrastructure project in-house.

- 46% cited escalating costs as a major challenge during the development of their realtime messaging infrastructure.

- 50% of self-built realtime messaging platforms required $100K-$200K a year in upkeep.

The reality is that building realtime infrastructure is hard and the timeline can be unpredictable. Plus, not every organization has the time, money, or expertise to build realtime infrastructure in-house. Even Adobe figured it would be easier to buy Figma for $20bn than build realtime multiplayer collaboration into their products.

Option 2: Leveraging a PaaS for your instant messaging or chat app infrastructure

If you’re looking to deliver a production-ready chat or instant messaging app without the complexity of building everything yourself, a PaaS is your best route.

For example, you could enlist an app development platform like Google-owned Firebase to build, test, release, and monitor web and mobile chat apps. However, it can be limiting when it’s time to scale and build features beyond a simple MVP. Not to mention vendor lock-in, as it restricts you to Google’s ecosystem.

Another option is to choose a flexible PaaS, like Ably, which is a serverless WebSocket PaaS that helps developers build scalable, realtime experiences—like chat—without the hassle of managing the underlying infrastructure.

The case for using a PaaS gets stronger the deeper you dig into the challenges of building and maintaining the infrastructure. In addition to the statistics above, our survey revealed that:

- 60% switched to PaaS to improve live UX with stable infrastructure.

- 56% ranked risk reduction as the second most compelling reason to swap to a PaaS.

- 55% said the third critical reason to move to a PaaS was to redeploy engineers into core product work.

- 65% of DIY solutions had an outage or significant downtime in the last 12-18 months.

The bottom line is: if you want to get your instant messaging or chat app to market sooner and grow it quickly, you’re better off using a PaaS that takes care of the heavy lifting and can guarantee predictable performance at scale.

Simplifying your instant messaging and realtime chat app development with Ably

Given the potential challenges involved in building a reliable, realtime messaging app infrastructure, it's understandable why companies such as HubSpot choose to deliver their chat functionality using Ably.

Ably provides highly reliable, globally scalable infrastructure for realtime messaging, delivered over WebSocket-based APIs. In addition, Ably offers a set of flexible capabilities for building rich chat features: user presence, message interactions, history & rewind, push notifications, user authentication, and automatic reconnections with continuity.

There’s no complex infrastructure to provision, manage, or scale. And, we provide guarantees and SLAs across performance, data integrity, reliability, and availability. With Ably, you can count on:

- Predictable performance: A low-latency, high-throughput global edge network, with median latencies of <65ms.

- Guaranteed ordering & delivery: Messages are delivered in order and exactly once, even after disconnections.

- Fault tolerant infrastructure: Redundancy at regional and global levels with 99.999% uptime SLAs.

- High scalability & availability: Built and battle-tested to handle millions of concurrent connections at effortless scale.

- Optimized build times and costs: Deployments typically see a 21x lower cost and upwards of $1M saved in the first year.

Ably fits snugly in the heart of your architecture—between your frontend and backend—and provides managed integrations with other protocols and third-party services.

With the complexities of realtime infrastructure taken care of—and the freedom to adapt and build capabilities to suit your needs—you can deliver a live chat app 3x as fast without skimping on features. That lets you focus on your code and gives you the chance to give the big players a run for their money.

Chat app resources

- Ably’s chat apps reference guide

- Introducing the Fully Featured Scalable Chat App

- Build your own live chat web component with Ably and AWS

- Building a realtime chat app with Next.js and Vercel

- Building a chat app in React Native

- Building a realtime chat app with Laravel using WebSockets

- Scalable, dependable chat apps with Apache Kafka and Ably