Live chat is the most common type of realtime web experience. Messaging platforms like WhatsApp and Slack are embedded in our everyday lives: we use them daily to keep in touch with friends and family, and to communicate and collaborate at work. With live chat functionality also prominent across e-commerce, live streaming, and e-learning experiences, end users have come to expect (near) instant message receipt and delivery. Meeting these expectations requires a robust realtime messaging system that delivers at any scale.

In this blog post, we’ll explain what we mean by a realtime messaging system, before taking a deep dive into the challenges and best practices for scaling realtime messaging for chat use cases.

What is realtime messaging?

Realtime messaging refers to a communication model where data is delivered, received, and consumed instantly or near-instantly (as soon as it becomes available). Implemented using low-latency, event-driven protocols like WebSockets, Server-Sent Events, and MQTT, realtime messaging underpins a wide array of use cases such as:

- Broadcasting realtime event data, such as live scores and traffic updates.

- Facilitating multiplayer collaboration on shared projects and whiteboards.

- Delivering push notifications and alerts.

- Keeping your backend and frontend in realtime sync.

- Adding realtime asset tracking capabilities to urban mobility and food delivery apps.

- And, of course, powering live chat experiences.

The huge demand for live chat

As live chat has become a communication channel that users expect, businesses are seeing it drive engagement and retention. As a result, it’s likely to become even more popular and widespread over the coming years. By adding a live chat feature to a website or application, a business can:

- Provide faster customer support: Live chat resolves queries 13x faster than email or online forms.

- Deliver a better customer experience: The average customer satisfaction rate for live chat support is 83.1%. Realtime support via chat helps businesses quickly address concerns and smooth out any bumps in the customer journey.

- Increase sales and revenue: Customers that use live chat spend 60% more per purchase. With a direct channel for targeted messaging and personalized deals, it makes sense that live chat can boost a company’s conversion rate by 3.87%.

- Increase customer loyalty: An eMarketer survey found that 63% of customers were more likely to return to a website that offers live chat. Glassix found that a luxury brand using WhatsApp saw a 58% increase in customer retention.

If you’re planning to build a stand-alone chat app or to add chat functionality to your app, you will inevitably have to consider scalability. Your chat app should be engineered so that it’s able to reliably serve a volatile, constantly changing number of people - between tens and potentially millions of concurrent users, some “chattier” than others.

The challenges of scaling realtime messaging for chat use cases

There are countless complexities, trade-offs, and common pitfalls involved in building scalable realtime messaging systems. Here, we’ll focus on some of the key, high-level challenges you will face when building a realtime messaging system that’s able to provide reliable, seamless, and uninterrupted experiences to chat users at scale.

Ensuring message delivery across disconnections

All messaging systems will experience client disconnections. What’s important is ensuring that when this happens, data integrity is preserved. That means making sure no message is lost, delivered more than once, or delivered out of order, particularly as your system scales and the volume of disconnects grows. Here are some best practices for preserving data integrity:

- Ensure disconnected clients can reconnect automatically, without any user action. The best way to do this is exponential backoff: increase the delay after each failed reconnection attempt, up to a maximum backoff time. This gives you time to add capacity to the system so it can deal with reconnection attempts that might happen simultaneously. When deciding how to handle reconnections, you should also consider the impact that frequent reconnect attempts have on the battery of user devices.

- Ensure data integrity by persisting messages somewhere, so they can be re-sent if needed. This means deciding where to store messages and how long to store them.

- Keep track of the last message received on the client side. To achieve this, you can add sequencing information to each message (e.g. a serial number to specify position in an ordered sequence of messages). This enables delivery of the backlog of undelivered messages to resume where it left off when the client reconnects.
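
To make the reconnection advice concrete, here is a minimal sketch of exponential backoff with a maximum delay. The function name, the default values, and the optional jitter are our own assumptions for illustration. Jitter is not discussed above; it is a common addition that spreads out clients that dropped at the same moment.

```python
import random


def backoff_delays(base=1.0, factor=2.0, max_delay=30.0, attempts=8,
                   jitter=0.0, rng=None):
    """Yield the wait (in seconds) before each reconnection attempt.

    All defaults are illustrative assumptions, not recommended values.
    """
    rng = rng or random.Random()
    for attempt in range(attempts):
        # Grow the delay exponentially, but never beyond max_delay.
        delay = min(max_delay, base * factor ** attempt)
        # Optional jitter: shave off a random fraction (up to `jitter`)
        # so clients that disconnected together do not retry in lockstep.
        if jitter:
            delay -= rng.uniform(0, jitter * delay)
        yield delay
```

With the defaults above and no jitter, the waits are 1, 2, 4, 8 and 16 seconds, then 30 seconds for every later attempt. A longer maximum delay is gentler on device batteries, at the cost of slower recovery.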

Additionally, you will likely need capabilities like message deduplication, idempotent operations (where possible), and acknowledgments (ACKs) for message delivery.
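
As a rough illustration of how persistence, sequencing, deduplication, and ACKs fit together, the sketch below keeps an in-memory log per channel and lets a client resume from its last serial. The class names (`ChannelLog`, `Client`) are hypothetical, and a real system would persist messages durably rather than in a Python list.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    serial: int  # position in the channel's ordered sequence
    body: str


@dataclass
class ChannelLog:
    """Server-side message store for one channel (in memory for this sketch)."""
    messages: list = field(default_factory=list)

    def append(self, body):
        msg = Message(serial=len(self.messages) + 1, body=body)
        self.messages.append(msg)
        return msg

    def since(self, last_serial):
        """Return the ordered backlog after the given serial."""
        return [m for m in self.messages if m.serial > last_serial]


@dataclass
class Client:
    last_serial: int = 0
    received: list = field(default_factory=list)

    def deliver(self, msg):
        """Apply a message at most once; return the serial to acknowledge."""
        if msg.serial <= self.last_serial:
            return self.last_serial  # duplicate: ignore it, but still ACK
        if msg.serial != self.last_serial + 1:
            raise ValueError("gap detected: request backlog from last_serial")
        self.received.append(msg.body)
        self.last_serial = msg.serial
        return self.last_serial
```

On reconnect, the client sends its `last_serial`, and the server replays `log.since(last_serial)`. Because `deliver` ignores serials it has already seen, a replay that overlaps with earlier deliveries is harmless.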

Read more about how to ensure data integrity across disconnections.

Achieving consistently low latencies

Low-latency data delivery is the cornerstone of any realtime messaging system. The average human reaction time is approximately 250ms, and most people perceive a response time of 100ms as instantaneous. This means that messages delivered in 100ms or less will be received in realtime from a user perspective.

However, ensuring predictable low latency when you’re building a system at scale is no easy feat since it’s impacted by a range of factors, notably:

- Network congestion. The more devices and users, the more data is being transferred, which can lead to bottlenecks and delays.

- Processing power. As the number of connections and messages increases, so does the processing power needed to handle them. If the system is not equipped to handle a large number of chat connections simultaneously, latency can increase.

- Distance. The longer the distance, the greater the delay due to the time it takes for data to travel back and forth.

To achieve low latency, you need the ability to (dynamically) increase the capacity of your server layer and reassign load. This way, you can ensure there’s enough processing power to prevent your servers from being slowed down or even overrun.
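
As a back-of-the-envelope sketch, the capacity decision can be reduced to a small calculation: how many servers are needed for the current number of connections, while keeping some spare room on each one. The function name, the 20% headroom, and the minimum of two servers are illustrative assumptions.

```python
import math


def desired_servers(connections, per_server_capacity, headroom=0.2, min_servers=2):
    """Estimate how many servers are needed for the current connection count.

    `headroom` is the fraction of each server deliberately left unused so that
    spikes do not immediately overrun it (an assumed figure for this sketch).
    """
    usable = per_server_capacity * (1 - headroom)
    return max(min_servers, math.ceil(connections / usable))
```

For example, 100,000 connections on servers rated for 10,000 connections each, with 20% headroom, works out to 13 servers. An autoscaler would re-run a calculation like this as load changes.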

You should also consider using an event-driven protocol designed and optimized for low-latency communication, such as WebSocket.

Compared to some alternatives, like HTTP long polling, WebSockets eliminate the need for a new connection with every request, drastically reducing the size of each message (no HTTP headers). This helps save bandwidth and decreases overall latency, making WebSockets a better fit for realtime messaging in general. The trade-off is that WebSocket, being stateful, is arguably harder to scale than HTTP, as it relies on a persistent connection between client and server.
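
To put rough numbers on the per-message saving, the sketch below computes the framing overhead of a single WebSocket frame using the header layout defined in RFC 6455. The 500-byte figure for HTTP headers is purely an assumption for comparison; real header sizes vary widely with cookies and other metadata.

```python
def websocket_frame_overhead(payload_len, masked=True):
    """Header bytes for one WebSocket frame, following RFC 6455 framing."""
    overhead = 2                # FIN/opcode byte + mask bit and 7-bit length
    if payload_len > 65535:
        overhead += 8           # 64-bit extended payload length
    elif payload_len > 125:
        overhead += 2           # 16-bit extended payload length
    if masked:
        overhead += 4           # masking key, required on client-to-server frames
    return overhead


# Assumed size of request headers on one long-polling request (illustrative only).
ASSUMED_HTTP_HEADER_BYTES = 500
```

Under these assumptions, a 50-byte chat message sent from a client carries 6 bytes of WebSocket framing, compared with hundreds of bytes of headers on each long-polling request.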

Learn more about the differences between WebSocket and HTTP long polling.

Dealing with volatile demand

Any system that’s accessible over the public internet should expect to deal with an unknown (but potentially high) and quickly changing number of users.

For example, if you offer a commercial chat solution in specific geographies, you want to avoid being overprovisioned globally by scaling up only when you would expect to see high traffic in specific geographies (during working hours) and down during other times. For global businesses, this might be at different times of the day as you “follow the sun” to work out when different users are likely to be active.

But regardless of when you expect high traffic, you need to be able to account for unexpected out-of-hours activity. Therefore, to operate your messaging service cost-effectively, you need to scale up and down dynamically, depending on load, and avoid being overprovisioned at all times. Ensuring your realtime messaging system can handle this involves two key things:

1. Scaling the server layer

At first glance, vertical scaling seems attractive, as it's easier to implement and maintain than horizontal scaling – especially if you’re using a stateful protocol like WebSockets. However, vertical scaling has some serious practical limitations:

- Single point of failure. If your server crashes, there’s the risk of losing data, and your app will be severely affected.

- Technical ceiling. You can scale only as far as the biggest option available from your cloud host or hardware supplier (either way, there’s a finite capacity).

- Traffic congestion. During high-traffic periods, the server has to deal with a huge workload, which can lead to issues such as increased latency or dropped packets.

- Less flexibility. Switching out a larger machine will usually involve some downtime, meaning you need to plan for changes in advance and find ways to mitigate end-user impact.

In contrast, horizontal scaling is a more dependable model since you are able to protect your system’s availability using other nodes in the network if a server crashes or needs to be upgraded.

The downside with horizontal scaling is the complexity that comes with having an entire server farm to manage and optimize, plus a load-balancing layer. You’ll have to decide on things like:

- The best load-balancing algorithm for your use case (e.g., round-robin, least-connected, hashing).

- How to redistribute load evenly across your server farm — including shedding and reassigning existing load during a scaling event.

- How to handle disconnections and reconnections.
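
To compare the load-balancing options mentioned above, here is a simplified sketch of the three algorithms. The class names are hypothetical, and production load balancers also account for health checks, weights, and server changes, which are left out here.

```python
import hashlib
import itertools


class RoundRobin:
    """Hand out servers in a fixed rotation, ignoring current load."""
    def __init__(self, servers):
        self._cycle = itertools.cycle(servers)

    def pick(self, client_id=None):
        return next(self._cycle)


class LeastConnections:
    """Send each new connection to the server with the fewest open ones."""
    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def pick(self, client_id=None):
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        self.active[server] -= 1


class HashBased:
    """Map a client ID to a server so the same client lands on the same node."""
    def __init__(self, servers):
        self.servers = list(servers)

    def pick(self, client_id):
        digest = hashlib.sha256(client_id.encode()).digest()
        return self.servers[int.from_bytes(digest[:8], "big") % len(self.servers)]
```

Least-connections tends to suit long-lived WebSocket connections, because it reacts to how many connections each server is actually holding. Note that this simple hashing scheme remaps most clients when the server list changes, which matters during a scaling event.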

Read more about horizontal vs vertical scaling and the associated challenges.

If you need to support a fallback transport, it adds to the complexity of horizontal scaling. For example, if you use WebSocket as your main transport, then you need to consider whether users will connect from environments where it might not be available (e.g., restrictive corporate networks and certain browsers). If they will, then fallback support (e.g., for HTTP long polling) will be required. When handling fundamentally different protocols, your scaling parameters change since you need a strategy to scale both. You might even need to have separate server farms to handle WebSockets vs. HTTP traffic.
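
On the client side, fallback support can be sketched as trying each transport in order of preference. The helper below is a simplified illustration; `open_websocket` and `open_long_polling` are hypothetical stand-ins for real transport clients, with the WebSocket one hard-coded to fail so the fallback path is visible.

```python
def connect_with_fallback(transports):
    """Try each (name, connect) pair in order; return the first that succeeds."""
    failures = []
    for name, connect in transports:
        try:
            return name, connect()
        except ConnectionError as exc:
            failures.append(f"{name}: {exc}")
    raise ConnectionError("all transports failed (" + "; ".join(failures) + ")")


# Hypothetical stand-ins for real transport clients.
def open_websocket():
    raise ConnectionError("blocked by proxy")  # simulate a restrictive network


def open_long_polling():
    return "long-polling session"
```

Calling `connect_with_fallback([("websocket", open_websocket), ("long-polling", open_long_polling)])` here returns the long-polling session, since the simulated WebSocket attempt fails.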

2. Architecting your system for scale

Since it’s hard to know how many chat users might be using your app at any given time, you should architect your realtime messaging system based on a pattern designed for scale. One of the most popular and dependable choices is the publish/subscribe (pub/sub) pattern.

Pub/sub provides a framework for exchanging messages between any number of publishers and subscribers. Both publishers and subscribers are unaware of each other. They are decoupled by a message broker that groups messages into channels (or topics). Publishers send messages to channels, while subscribers receive messages by subscribing to them.

You can use pub/sub for one-to-one messaging (e.g. private chat between two users) and for one-to-many messaging (useful for multi-user chat channels, rooms, and groups). Its decoupled nature helps ensure your apps can theoretically scale to limitless subscribers. As long as the message broker can scale predictably, you shouldn’t have to make other changes to deal with unpredictable user volumes.
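
The pattern can be illustrated with a tiny in-memory broker. This is only a sketch of the idea: the `Broker` class is hypothetical, runs in a single process, and has none of the persistence or scaling properties a real message broker needs.

```python
from collections import defaultdict


class Broker:
    """Minimal in-memory pub/sub broker: channels map to subscriber callbacks."""
    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, channel, callback):
        self._subscribers[channel].append(callback)

    def publish(self, channel, message):
        """Deliver to every subscriber on the channel; return how many there were."""
        callbacks = self._subscribers.get(channel, [])
        for callback in callbacks:
            callback(message)
        return len(callbacks)
```

The publisher only names a channel and never learns who is subscribed. A private chat is a channel with two participants, and a group chat is the same code with more subscribers.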

That being said, pub/sub comes with its complexities. For any publisher, there could be one, many, or no subscriber connections listening for messages on the same channel. If you’re using WebSockets and you’ve spread all connections across multiple frontend servers as part of your horizontal scaling strategy, you now need a way to route messages between your own servers, such that they’re delivered to the corresponding frontends holding the WebSocket connections to the relevant subscribers.
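
One way to picture this routing problem is a shared lookup of which frontends hold subscribers for each channel. The sketch below models that in a single process with hypothetical `Router` and `Frontend` classes; in a real deployment the router would be a network service or backplane rather than a Python object.

```python
from collections import defaultdict


class Router:
    """Tracks which frontends have at least one subscriber per channel."""
    def __init__(self):
        self.interested = defaultdict(set)

    def register(self, channel, frontend):
        self.interested[channel].add(frontend)

    def route(self, channel, message):
        """Forward only to frontends holding subscribers; return their names."""
        targets = self.interested.get(channel, ())
        for frontend in targets:
            frontend.deliver_local(channel, message)
        return sorted(frontend.name for frontend in targets)


class Frontend:
    """A server holding client connections (modelled here as inbox lists)."""
    def __init__(self, name, router):
        self.name = name
        self.router = router
        self.local = defaultdict(list)  # channel -> inboxes connected to this node

    def subscribe(self, channel, inbox):
        self.local[channel].append(inbox)
        self.router.register(channel, self)

    def publish(self, channel, message):
        return self.router.route(channel, message)

    def deliver_local(self, channel, message):
        for inbox in self.local[channel]:
            inbox.append(message)
```

A message published through any frontend reaches subscribers connected to other frontends, while frontends with no subscribers on that channel receive nothing.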

Making your system fault tolerant

When you’re trying to build scalable, production-ready live chat experiences for thousands or even millions of chat users, you need to think about the fault tolerance of the underlying realtime messaging system.

Fault-tolerant systems assume that component failures will happen and ensure that the system has enough redundancy to continue operating. The larger the system, the more likely failures are — and the more important fault-tolerance becomes.

To make your system fault-tolerant, you’ll need to make sure that it’s redundant against any kind of failure (software, hardware, network, or otherwise). This includes things like:

- Having the ability to elastically scale your server layer.

- Operating with extra capacity on standby.

- Distributing your infrastructure across multiple regions (sometimes entire regions do fail, so to provide high availability and superior uptime guarantees, you shouldn’t rely on any single region).
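
Two of these ideas, standby capacity and multi-region distribution, can be expressed as simple checks. The sketch below is illustrative only: the function names and the region names in the usage note are hypothetical, and real capacity planning involves far more than a single number per region.

```python
def survives_single_region_failure(capacity_by_region, total_load):
    """True if the remaining regions can carry total_load when any one fails."""
    total = sum(capacity_by_region.values())
    return all(total - capacity >= total_load
               for capacity in capacity_by_region.values())


def pick_region(preferred_order, healthy):
    """Return the first healthy region in the client's order of preference."""
    for region in preferred_order:
        if region in healthy:
            return region
    raise RuntimeError("no healthy region available")
```

For example, three regions with capacity for 100 units each can lose any one region while serving a load of 200, but not a load of 250. A client that prefers `"eu"` then `"us"` falls back to `"us"` when `"eu"` is not in the healthy set.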

While necessary, implementing fault-tolerant mechanisms and distributing the infrastructure across multiple regions creates complexity around preserving data integrity (guaranteed message ordering and delivery).

Check out our article, Engineering a fault-tolerant distributed system, to get an idea of the complexities involved.

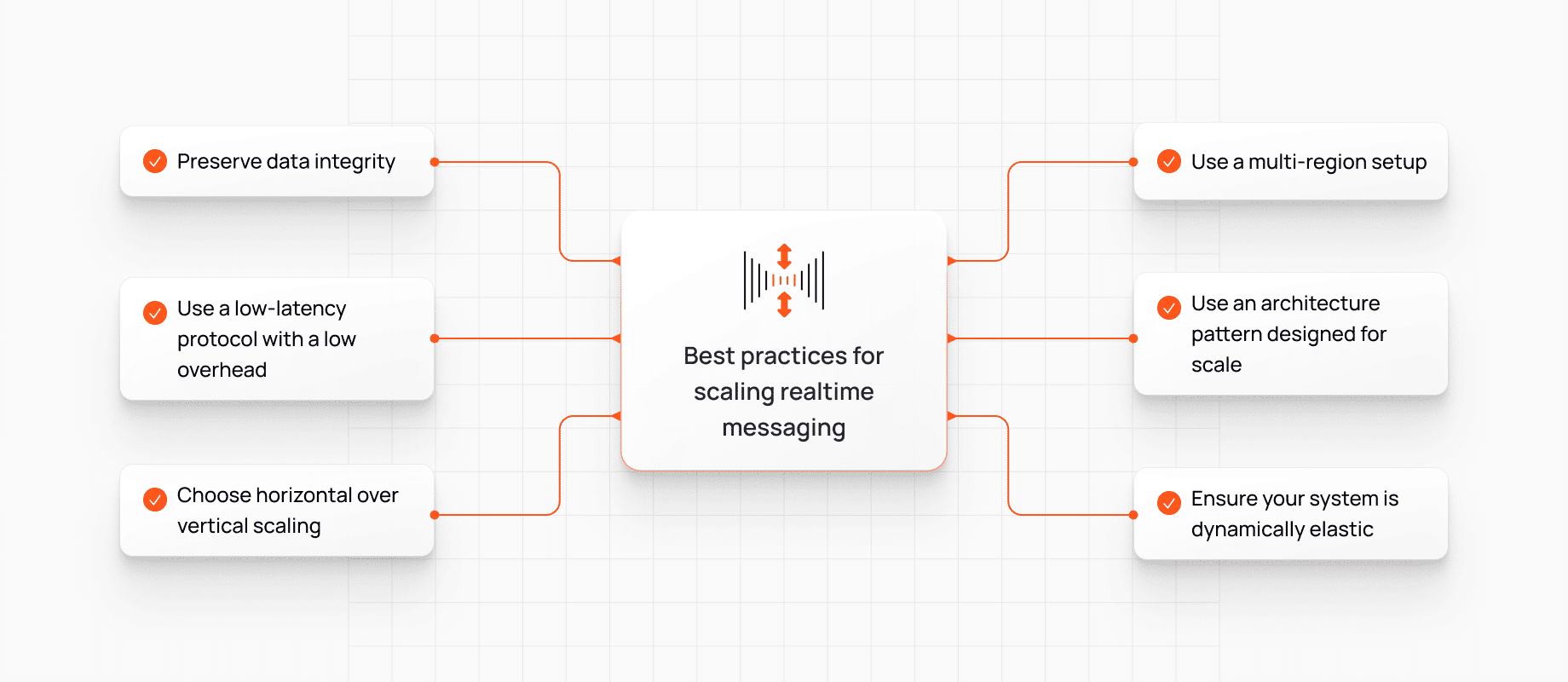

Best practices for scaling realtime messaging

Given the challenges associated with scaling realtime messaging, it’s critical to make the right choices upfront to ensure your chat system is dependable at scale. Here are the best practices for scaling your realtime messaging infrastructure:

- Preserve data integrity with mechanisms that allow you to enforce message ordering and delivery (preferably exactly-once) at all times.

- Use a low-overhead protocol like WebSocket that’s designed and optimized for low-latency communication.

- Choose horizontal over vertical scaling. Although more complex, horizontal scaling is the more resilient model in the long run: if a server crashes or needs to be upgraded, you can protect your system’s overall availability by distributing the failed machine’s workload across the other nodes in the network.

- Use a multi-region setup. A globally-distributed, multi-region setup puts you in a better position to ensure consistently low latencies, and avoid single points of failure and congestion.

- Use an architecture pattern designed for scale, like the pub/sub pattern, which provides a framework for exchanging messages between publishers and subscribers.

- Ensure your system’s dynamically elastic. The ability to rapidly (automatically) add more capacity to your realtime messaging infrastructure to deal with spikes, and then dispose of it when it’s no longer needed, is key to handling the ebb and flow of traffic.

And ultimately, prepare for things to go wrong. Whenever you engineer a large-scale realtime messaging system, something will fail eventually. Plan for the inevitable by building redundancy into every layer of your realtime infrastructure.

Should I build or buy my realtime messaging solution?

On the surface, building your own realtime messaging system to power live chat experiences offers:

- Total control. Building in-house allows you to engineer a solution that is tailor-made to meet your specific requirements. And, if you have the engineering expertise and capacity to deliver it, you can take both the product and technical decisions to deliver the functionality you need.

- Choice of tech stack. Whether open-source tech or cloud-based infrastructure, you can select the tooling that meets both your budget and your development team’s preferences.

In practice though, delivering a scalable realtime messaging system is fraught with engineering complexities. In addition to what we’ve discussed in this article, the following resources give a clear picture of what’s involved in building and scaling realtime messaging for chat use cases in-house:

- What it takes to build a realtime chat or messaging app

- The challenges of scaling a chat system with Socket.IO

The time and cost implications of building your own realtime messaging service

Whether it’s to power live chat or another type of realtime experience, building and maintaining a realtime messaging system in-house, especially one you can trust to deliver at scale, is expensive and time-consuming, and requires significant engineering effort.

We surveyed over 500 engineering leaders to surface the challenges they faced when building realtime messaging infrastructure in-house, and found that:

- The average time to build basic realtime messaging infrastructure is 10.2 person-months.

- More than 90% of engineering leaders said their realtime messaging projects required between four and ten engineers.

- A typical self-built realtime messaging platform costs around $100K-$200K a year to maintain.

In addition, you also need to factor in the time, money, and effort required to ship chat features like user presence (online/offline status), typing indicators, emoji reactions, or read receipts.

Reduce time to market with a managed third-party provider

Research by McKinsey shows that shipping a product six months late can reduce profitability by a third. If you don’t have the time and resources required to manage your realtime messaging system in-house, using a managed third-party solution such as a PaaS can help you to build and launch realtime features faster at a reduced cost, with less operational overhead.

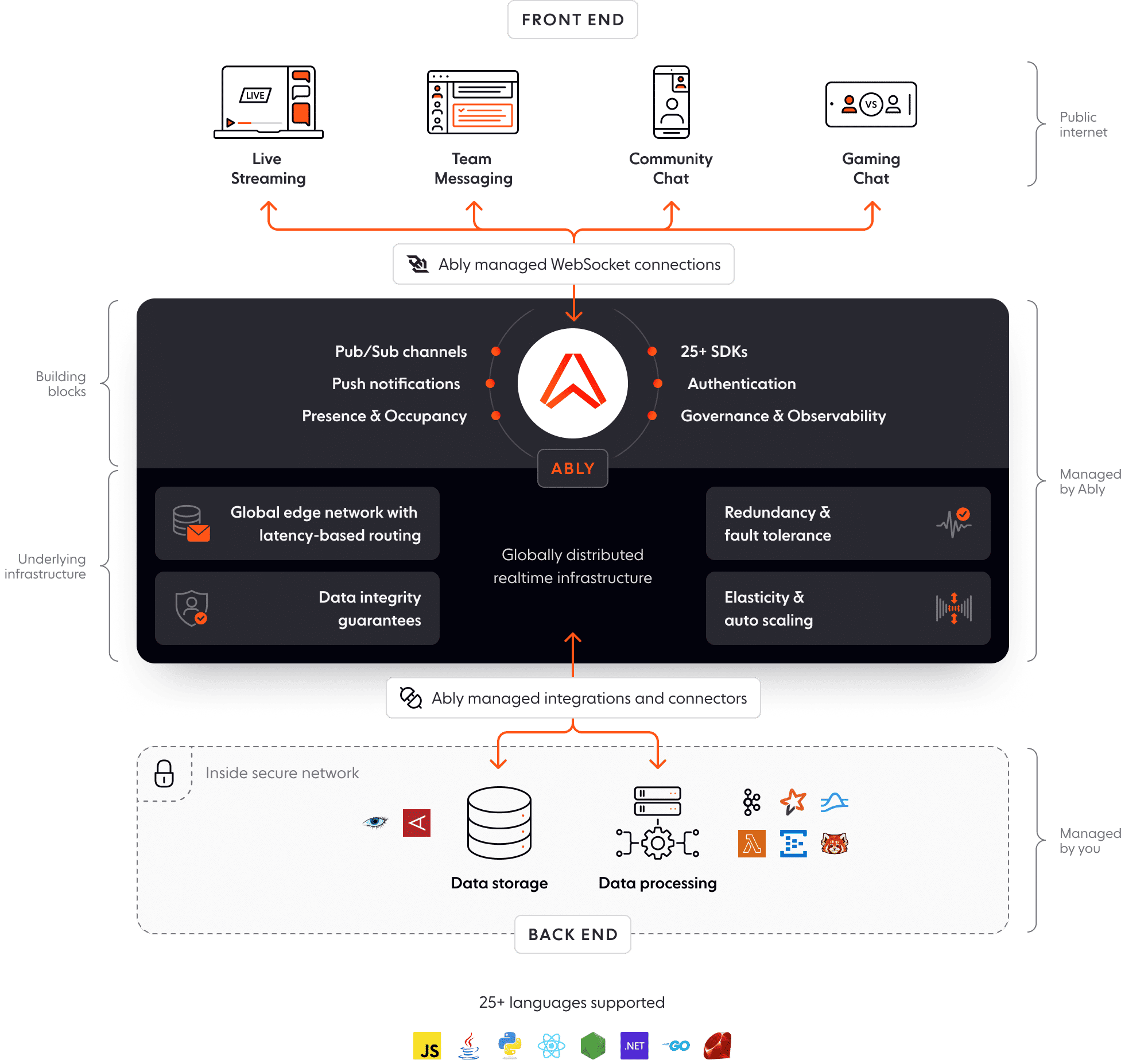

Scale messaging for live chat use cases effortlessly with Ably

Ably is a realtime experience infrastructure provider. Our APIs, SDKs, and managed integrations help developers build and deliver realtime experiences without having to worry about maintaining and scaling messy realtime messaging infrastructure.

Ably offers:

- A globally-distributed network of seven data centers and 385 edge acceleration points of presence.

- Client SDKs for every major programming language and development platform.

- Pub/sub APIs with rich features, such as message delta compression, multi-protocol support (WebSockets, MQTT, Server-Sent Events), automatic reconnections with continuity, presence, and message history.

- Guaranteed message ordering and delivery.

- Global fault tolerance and a 99.999% uptime SLA.

- < 65ms round-trip latency (P99).

- Elastic scalability to handle up to millions of concurrent clients, and a constantly fluctuating number of connections and channels.

Companies like HubSpot and Guild use Ably’s realtime messaging capabilities to ship outstanding chat experiences. Compared to building their own realtime messaging system, Ably customers typically get to market three times faster, with an average $938,000 return on investment in the first year of using our platform.

Get started with a free Ably account