It’s not an exaggeration to say that Hypertext Transfer Protocol (HTTP) made the Internet as we know it. HTTP was originally proposed as an application protocol in 1989 by Tim Berners-Lee, inventor of the World Wide Web. The first documented version, HTTP/0.9, was called the one-line protocol. Given that it spawned the World Wide Web, it could now be described as the greatest one-liner ever written.

As our demands on the Internet grew, HTTP had to evolve as a network protocol to deliver acceptable performance. HTTP/2 marked a major overhaul: it was the first new version of the protocol to be standardized since HTTP/1.1 in 1997 (RFC 2068). This article describes how HTTP/2 was designed to overcome the limitations of HTTP/1.1, what the new protocol has made possible, how HTTP/2 works, and what its own limitations are.

HTTP/2 Client-Server over a single TCP connection.

From humble origins – A brief history of HTTP

That “one-line protocol” of HTTP/0.9 consisted of a single request line: the method GET followed by the document address (with an optional port), terminated by a carriage return and a line feed. A request was simply a string of ASCII characters. Only HTML files could be transmitted, and there were no HTTP headers, status codes, or error codes.
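As a minimal sketch of how little there was to the format, the functions below (hypothetical names, not from any library) build and check an HTTP/0.9 request line. They assume the request is just `GET`, a space, the document path, and CRLF; the server would then reply with the raw HTML and close the connection.

```javascript
// Build a one-line HTTP/0.9 request: the method GET, the document path, then CRLF.
// There is no version token and there are no headers.
function buildHttp09Request(path) {
  return `GET ${path}\r\n`;
}

// Parse such a request line; returns the path, or null if it is not a bare HTTP/0.9 request.
function parseHttp09Request(line) {
  const match = /^GET (\S+)\r\n$/.exec(line);
  return match ? match[1] : null;
}

const request = buildHttp09Request('/index.html');
console.log(JSON.stringify(request)); // "GET /index.html\r\n"
console.log(parseHttp09Request(request)); // /index.html
```

A request line carrying a version such as `HTTP/1.0` is rejected by this parser, since HTTP/0.9 had no version token.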

The first stage of evolution had to come quickly.

HTTP/1.0 – Getting past the limitations

To overcome the severe limitations of HTTP/0.9, browsers and servers modified the protocol independently. Some key protocol changes:

Requests were allowed to consist of multiple header fields separated by newline characters.

Each server response began with a single status line.

Responses gained a header section, made up of header fields separated by newline characters.

Servers could respond with files other than HTML.

These modifications were not done in an orderly or agreed-upon fashion, leading to different flavours of HTTP/0.9 in the wild, which in turn caused interoperability problems. To resolve these issues, the HTTP Working Group was set up, and in 1996 it published HTTP/1.0 (RFC 1945). It was an informational RFC, merely documenting common usage at the time. As such, HTTP/1.0 is not considered a formal specification or an Internet standard.

Code example of request/response for HTTP/1.0

Request from a client with the GET method

```http
GET /contact HTTP/1.0
Host: www.ably.io
User-Agent: Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.0)
Accept: text/*, text/html, text/html;level=1, */*
Accept-Language: en-us
```

Request from a client with the POST method

```http
POST /contact HTTP/1.0
Host: www.ably.io
User-Agent: Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.0)
Accept: text/*, text/html, text/html;level=1, */*
Accept-Language: en-us
Content-Length: 27
Content-Type: application/x-www-form-urlencoded
```

200 OK response

```http
HTTP/1.0 200 OK
Date: Wed, 24 Jun 2020 11:26:43 GMT
Content-Type: text/html; charset=utf-8
Connection: close
Server: cloudflare
```

HTTP/1.1 – Bringing (some) order to the chaos

By 1995, work had already begun to develop HTTP into a standard. HTTP/1.1 was first defined in RFC 2068, and released in January 1997. Improvements and updates were released under RFC 2616 in May 1999.

HTTP/1.1 introduced many feature enhancements and performance optimizations, including:

Persistent and pipelined connections

Virtual hosting

Content negotiation, chunked transfer, compression and decompression, transfer encoding, and caching directives

Character set and language tags

Client identification and cookies

Basic authentication, digest authentication, and secure HTTP
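Chunked transfer, one of the features listed above, lets an HTTP/1.1 server start sending a response before it knows the total length. Each chunk is written as its size in hexadecimal, CRLF, the data, and CRLF; a zero-size chunk followed by an empty line ends the body. The sketch below (a hypothetical helper) encodes a list of strings this way, counting sizes in UTF-8 bytes, and leaves out chunk extensions and trailer fields.

```javascript
// Encode body pieces using HTTP/1.1 chunked transfer coding:
// <size in hex>\r\n<data>\r\n ... terminated by a zero-length chunk and a final CRLF.
// Chunk extensions and trailer fields are omitted in this sketch.
function encodeChunked(pieces) {
  let out = '';
  for (const piece of pieces) {
    const size = Buffer.byteLength(piece, 'utf8'); // chunk size counts bytes, not characters
    if (size === 0) continue; // an empty chunk would prematurely signal the end of the body
    out += `${size.toString(16)}\r\n${piece}\r\n`;
  }
  return out + '0\r\n\r\n'; // last chunk, then the empty line that ends the message
}

console.log(JSON.stringify(encodeChunked(['Hello, ', 'chunked world!'])));
// "7\r\nHello, \r\ne\r\nchunked world!\r\n0\r\n\r\n"
```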

Code example of request/response for HTTP/1.1

Request from a client with the GET method

```http
GET /contact HTTP/1.1
Host: www.ably.io
User-Agent: Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.0)
Accept: text/*, text/html, text/html;level=1, */*
Accept-Language: en-us
```

Request from a client with the POST method

```http
POST /contact HTTP/1.1
Host: www.ably.io
User-Agent: Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.0)
Accept: text/*, text/html, text/html;level=1, */*
Accept-Language: en-us
Content-Length: 27
Content-Type: application/x-www-form-urlencoded
```

200 OK response

```http
HTTP/1.1 200 OK
Date: Wed, 24 Jun 2020 11:59:14 GMT
Content-Type: text/html; charset=utf-8
Transfer-Encoding: chunked
Server: cloudflare
```

The growing problems with HTTP/1.1

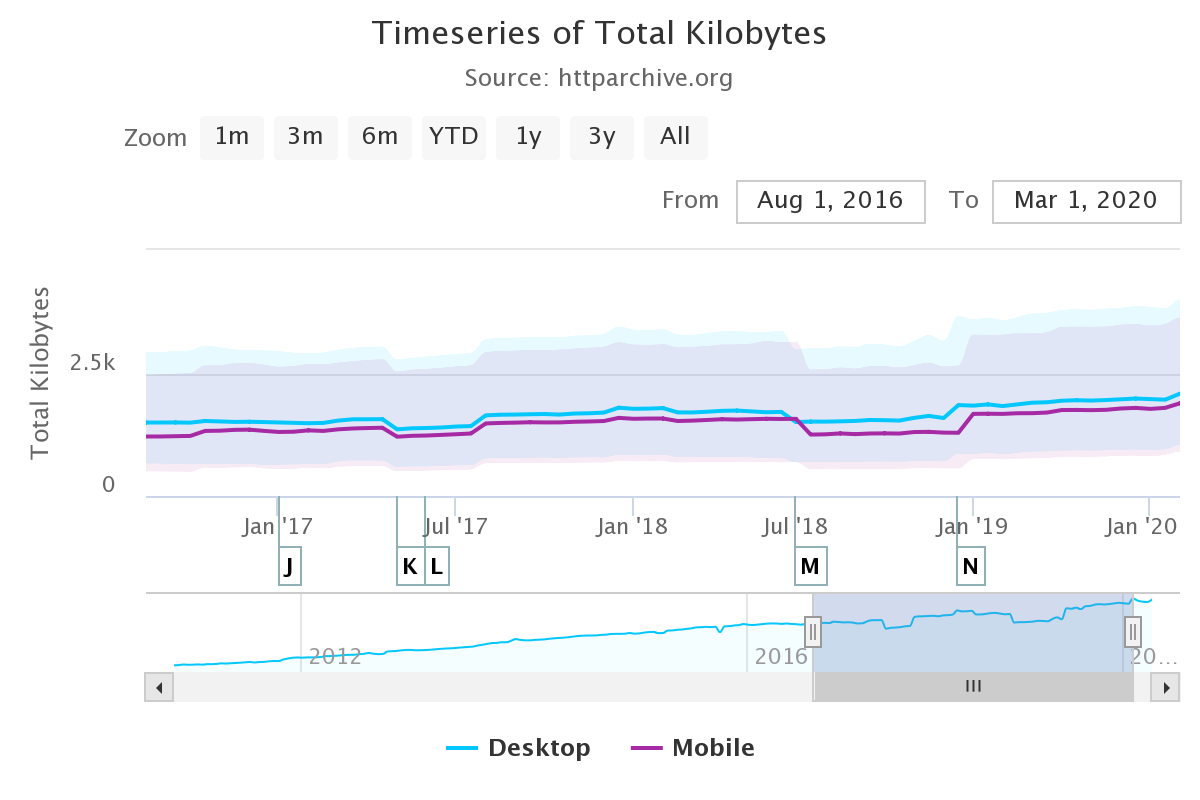

Use of the Internet grew steadily in the years following the new millennium, underpinned by HTTP/1.1. Meanwhile, websites kept growing bigger and more complex. This graph from the State of the Web report shows a 30.6% increase in the median size of desktop websites between November 2016 and November 2017, and a 32.3% increase for the mobile versions of these websites.

Website size increase in the year after November 2016 begins to push HTTP's abilities, with load times testing users' patience. Source: HTTP Archive: State of the Web.

Users do not take kindly to slow-loading websites. By November 2019, the median time for first contentful paint (FCP) for sites visited from desktop computers was 2.4 seconds. For mobile devices, the median time for FCP was 6.4 seconds. To keep a reader’s attention uninterrupted, FCP should be under one second.

Much of the lag was down to the limitations of HTTP/1.1. Let’s look at some of these limitations and developer hacks for overcoming them.

Head-of-line blocking

Head-of-line blocking occurs when the item at the front of a queue holds up everything behind it, increasing website response time. In HTTP/1.1, a client sends a request to a server over a Transmission Control Protocol (TCP) connection, and the server must return a complete response before that connection can be used again. Subsequent requests cannot use the TCP connection until the current response has finished, so one slow response delays every request queued behind it.

Responses 2 and 3 are blocked until response 1 is retransmitted and delivered. Adapted from 10.5 Exploring Head-of-Line Blocking.

At first, browsers were allowed only two concurrent connections to a server, and the browser had to wait until one of them was free, creating a bottleneck. The workaround of allowing browsers to make six concurrent connections only postponed the problem.

Developers started using domain sharding to split content across multiple subdomains. This allowed browsers to download more resources at the same time, which made the website load faster. This came at the cost of increased development complexity and extra overhead from setting up more TCP connections.

Pipelining

Pipelining is a technique in which clients send multiple requests to the server without waiting for each response. However, the server must respond to the requests in the order they were received, and both client and server have to support pipelining for it to work.

A large or slow response can break pipelining by blocking the responses behind it. Pipelining was challenging to implement because many intermediaries and servers didn’t process it correctly.

Unlike HTTP/1.1 pipelining, multiplexing in HTTP/2 avoids head-of-line blocking performance problems. Adapted from HTTP/2 vs HTTP/1 - Which is Faster?
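The in-order rule is what makes pipelining vulnerable to head-of-line blocking. The sketch below models it under simplifying assumptions: each response becomes ready at some time (illustrative milliseconds, not measurements) and takes no time to transmit, so a pipelined response can only be delivered once it and every response ahead of it are ready. The function name is hypothetical.

```javascript
// Model of HTTP/1.1 pipelining: responses must be sent in request order.
// readyTimes[i] is when the server has response i ready (illustrative milliseconds);
// transmission time is ignored. Response i cannot be delivered before response i - 1.
function pipelinedDeliveryTimes(readyTimes) {
  const delivered = [];
  let previous = 0;
  for (const ready of readyTimes) {
    previous = Math.max(previous, ready); // wait for this response and all earlier ones
    delivered.push(previous);
  }
  return delivered;
}

// Response 1 is slow; responses 2 and 3 are ready almost immediately but must wait.
console.log(pipelinedDeliveryTimes([100, 10, 20])); // [ 100, 100, 100 ]
```

Without the ordering constraint, responses 2 and 3 could have been delivered at 10 ms and 20 ms.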

Protocol overheads – Repetitive headers & cookies

HTTP is a stateless protocol: each request is independent, so client and server exchange additional request and response metadata with every message. Every HTTP request initiated by the browser carries around 700 bytes of metadata. Servers also use cookies for session management, and because a cookie is added to every request, the protocol overhead grows further.

One workaround was to serve static files that didn't need cookies, such as images, CSS, and JavaScript, from “cookieless” domains.

TCP slow start

TCP slow start is a feature of the protocol in which the network is probed to figure out the available capacity. HTTP over TCP therefore does not use the full bandwidth capacity from the start, which makes small transfers expensive. To overcome TCP slow start, developers used hacks like concatenating JavaScript and CSS files, and spriting small images.
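A rough model shows the cost. Assume, for illustration only, an initial congestion window of 10 segments (the value RFC 6928 recommends), a 1,460-byte maximum segment size, no packet loss, and a window that doubles every round trip; real TCP stacks differ in the details. The hypothetical function below counts the round trips needed to deliver a given number of bytes on a fresh connection.

```javascript
// Count round trips needed to send `bytes` on a new TCP connection during slow start.
// Simplified model (an assumption): no loss, the window doubles each RTT,
// and slow start never hands over to congestion avoidance.
function slowStartRoundTrips(bytes, initialWindowSegments = 10, mss = 1460) {
  let windowSegments = initialWindowSegments;
  let sent = 0;
  let roundTrips = 0;
  while (sent < bytes) {
    sent += windowSegments * mss; // a full window is sent in this round trip
    windowSegments *= 2;          // exponential growth during slow start
    roundTrips += 1;
  }
  return roundTrips;
}

console.log(slowStartRoundTrips(14600));  // 1
console.log(slowStartRoundTrips(100000)); // 3
```

Because every new connection starts again from the small initial window, spreading resources over many short-lived connections pays this ramp-up cost repeatedly.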

Evolution of HTTP/2

Simplicity was a core principle of HTTP. However, implementation simplicity came at the cost of performance. HTTP/1.1 has many inherent limitations, as we saw in the previous section. Over time these limitations became too much and HTTP needed an upgrade.

In 2009, Google announced SPDY, an experimental protocol. This project had the following high-level goals:

Target a 50% reduction in page load time.

Minimize deployment complexity.

Avoid the need for any changes to content by website authors.

Bring together like-minded parties interested in exploring protocols as a way of solving the latency problem.

In November 2009, Google engineers announced that they had achieved 55% faster load times. By 2012 SPDY was supported by Chrome, Firefox, and Opera.

The HTTP Working Group took notice of this and initiated an effort to take advantage of the lessons of SPDY. In November 2012, a call for proposals was made for HTTP/2, and the SPDY specification was adopted as the starting point.

Over the next few years, HTTP/2 and SPDY co-evolved, with SPDY as the experimental branch. HTTP/2 was published as a Proposed Standard in May 2015 (RFC 7540).

Under the hood: A description of HTTP/2

At a high level, HTTP/2 was designed to resolve the issues of HTTP/1.1. Let’s take a look at how HTTP/2 works. An important point to remember is that HTTP/2 is an extension of, not a replacement for, HTTP/1.1. It retains the application semantics of HTTP/1.1: functionality, HTTP methods, status codes, URIs, and header fields remain the same.

Structure of HTTP/2 messages

HTTP/2 has a highly structured format: HTTP messages are formatted into packets (called frames), and each frame is assigned to a stream. HTTP/2 frames have a specific format, including a length declared at the beginning of each frame and several other fields in the frame header.

In many ways, the HTTP/2 frame is similar to a TCP packet. Reading an HTTP/2 frame follows a defined process: the first 24 bits are the length of the frame's payload, followed by 8 bits that define the frame type, and so on.

After the frame header comes the payload. This could be HTTP headers or the body. These also have a specific format, known in advance. An HTTP/2 message can be sent in one or more frames.
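The sketch below encodes and decodes the fixed 9-octet frame header laid out in RFC 7540 (section 4.1) using Node.js `Buffer` methods. The function names are hypothetical; Node's `http2` module handles framing internally, so you would not normally write this yourself.

```javascript
// Encode the 9-octet HTTP/2 frame header (RFC 7540, section 4.1).
function encodeFrameHeader({ length, type, flags, streamId }) {
  const header = Buffer.alloc(9);
  header.writeUIntBE(length, 0, 3);               // 24-bit payload length
  header.writeUInt8(type, 3);                     // 8-bit frame type
  header.writeUInt8(flags, 4);                    // 8-bit flags
  header.writeUInt32BE(streamId & 0x7fffffff, 5); // reserved bit cleared + 31-bit stream ID
  return header;
}

// Decode a 9-octet frame header; a payload of `length` octets follows it on the wire.
function decodeFrameHeader(buf) {
  return {
    length: buf.readUIntBE(0, 3),
    type: buf.readUInt8(3),
    flags: buf.readUInt8(4),
    streamId: buf.readUInt32BE(5) & 0x7fffffff, // ignore the reserved bit
  };
}

// A HEADERS frame (type 0x1) with END_STREAM (0x1) and END_HEADERS (0x4) set, on stream 1.
const header = encodeFrameHeader({ length: 16, type: 0x1, flags: 0x5, streamId: 1 });
console.log(header.toString('hex')); // 000010010500000001
console.log(decodeFrameHeader(header)); // { length: 16, type: 1, flags: 5, streamId: 1 }
```

Because every field sits at a fixed offset, a receiver knows exactly how many bytes to read next, unlike the line-by-line parsing HTTP/1.1 requires.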

In contrast, HTTP/1.1 is an unstructured format consisting of lines of ASCII text. Ultimately, it’s a stream of characters rather than being specifically broken into separate pieces/frames (apart from the lines distinction).

HTTP/1.1 messages (or at least the first HTTP Request/Response line and HTTP Headers) are parsed by reading in one character at a time, until a new line character is reached. This is messy as you don’t know in advance how long each line is.

In HTTP/1.1, the length of the HTTP body is handled differently: it is typically declared in the Content-Length HTTP header. An HTTP/1.1 message must be sent in its entirety as one continuous stream of data, and the connection cannot be used for anything else until that message has been transmitted.

HTTP/2 is negotiated when the connection is set up. Over TLS, the client and server agree on HTTP/2 during the TLS handshake using Application-Layer Protocol Negotiation (ALPN); over an unencrypted connection, the client can start with HTTP/1.1 and ask to upgrade, or speak HTTP/2 directly if it already knows the server supports it. HTTP/2 uses a single TCP connection between the client and the server, which remains open for the duration of the interaction.

HTTP/2 design features

HTTP/2 introduced a number of features designed to improve performance:

Replacement of the textual protocol with a binary one. A binary framing layer creates an interleaved communication stream.

Full multiplexing instead of ordered, blocking request handling, which means a single connection can carry many requests in parallel.

Flow control to ensure that streams are non-blocking.

Stream prioritization to tackle problems caused by chopping up and multiplexing of multiple requests and responses.

Header compression to reduce overhead. See Header compression for details.

Server push. Proactive “push” responses from servers into client caches.

Binary framing layer

The binary framing layer is at the core of all the performance enhancements in HTTP/2. It sets out how messages are encapsulated and transferred between the client and the server.

Binary framing layer added to the application layer in HTTP/2. Adapted from Introduction to HTTP/2.

The binary framing layer breaks the communication between the client and server into small chunks and creates an interleaved bidirectional stream of communication. Thanks to the binary framing layer, HTTP/2 uses a single TCP connection that remains open for the duration of the interaction.

Let’s look at some terminology used in HTTP/2 before moving on.

HTTP/2 terminology

Frame. A frame is the smallest unit of communication in HTTP/2. A frame carries a specific type of data, such as HTTP headers or payloads. All frames begin with a fixed 9-octet header, which contains, amongst other things, a stream identifier field. A variable-length payload follows the header. The maximum size of a frame payload is 2^24 - 1 octets, although receivers only accept payloads up to 16,384 octets (2^14) unless they advertise a larger limit with the SETTINGS_MAX_FRAME_SIZE setting.

Message. A message is a complete HTTP request or response message. A message is made up of one or more frames.

Stream. A stream is a bidirectional flow of frames between the client and the server. Some important features of streams are:

A single HTTP/2 connection can have multiple concurrently open streams. The client and server can send frames from different streams on the connection. Streams can be shared by both the client and server. A stream can also be established and used by a single peer.

Either endpoint can close the stream.

The order of frames on a stream is important. Receivers process frames in the order they are received. The order of headers and data frames has semantic significance.

An integer identifies streams. The identifying integer is assigned by the endpoint which initiated the stream.

Multiple open streams on a single HTTP/2 connection, showing header and data frames.
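RFC 7540 also fixes how stream identifiers are chosen: streams opened by the client use odd numbers, streams opened by the server (for example, for server push) use even numbers, new identifiers must be larger than any used before, and stream 0 is reserved for frames that apply to the whole connection. A minimal allocator, using a hypothetical class name, might look like this:

```javascript
// Allocates new stream identifiers for one endpoint of an HTTP/2 connection.
// Clients use odd IDs (1, 3, 5, ...), servers use even IDs (2, 4, 6, ...);
// stream 0 is reserved for connection-level frames such as SETTINGS.
class StreamIdAllocator {
  constructor(isClient) {
    this.next = isClient ? 1 : 2;
  }

  allocate() {
    const MAX_STREAM_ID = 2 ** 31 - 1; // stream identifiers are 31-bit
    if (this.next > MAX_STREAM_ID) {
      // Identifiers cannot be reused, so a new connection is needed at this point.
      throw new Error('stream identifiers exhausted; open a new connection');
    }
    const id = this.next;
    this.next += 2; // keep the parity and always move upwards
    return id;
  }
}

const client = new StreamIdAllocator(true);
console.log(client.allocate(), client.allocate()); // 1 3
```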

Full multiplexing

The binary framing layer solves the problem of HTTP-level head-of-line blocking, making HTTP/2 fully multiplexed. As we have mentioned, the binary framing layer creates an interleaved bidirectional flow of streams from the “chunks” of information sent between the client and server.
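A toy model helps show why interleaving removes HTTP-level head-of-line blocking. The sketch below is not how any particular server schedules frames: it splits each response into frames of a fixed, illustrative size and sends one frame per stream in turn (round-robin), recording the position on the connection at which each response finishes. The function name is hypothetical.

```javascript
// Split each response body into frames of at most `frameSize` bytes and
// interleave them round-robin on one connection (a simplified scheduler).
function interleaveFrames(responses, frameSize) {
  const queues = responses.map(({ streamId, bytes }) => {
    const frames = [];
    for (let offset = 0; offset < bytes; offset += frameSize) {
      frames.push({ streamId, size: Math.min(frameSize, bytes - offset) });
    }
    return frames;
  });

  const wire = [];        // frames in the order they are written to the connection
  const completedAt = {}; // streamId -> position of that stream's last frame on the wire
  while (queues.some((q) => q.length > 0)) {
    for (const queue of queues) {
      const frame = queue.shift();
      if (!frame) continue;
      wire.push(frame);
      if (queue.length === 0) completedAt[frame.streamId] = wire.length - 1;
    }
  }
  return { wire, completedAt };
}

// Stream 1: a 100 KB file; stream 3: a 500-byte text response; 1 KB frames.
const { completedAt } = interleaveFrames(
  [{ streamId: 1, bytes: 100 * 1024 }, { streamId: 3, bytes: 500 }],
  1024,
);
console.log(completedAt); // { '1': 100, '3': 1 }
```

The short response finishes as the second frame on the wire instead of waiting behind all 100 frames of the large one.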

The client or the server can specify the maximum number of concurrent streams the other peer can initiate. A peer can reduce or increase this number at any time.
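That limit travels in a SETTINGS frame as SETTINGS_MAX_CONCURRENT_STREAMS (identifier 0x3); each setting in the payload is a 16-bit identifier followed by a 32-bit value. The hand-written encoder below (a hypothetical helper covering only a few settings) just shows the layout. In Node.js you would normally let the `http2` module produce this, for example through its `settings` options or `http2.getPackedSettings()`.

```javascript
// Setting identifiers from RFC 7540, section 6.5.2 (only a subset is listed here).
const SETTINGS_IDS = {
  MAX_CONCURRENT_STREAMS: 0x3,
  INITIAL_WINDOW_SIZE: 0x4,
  MAX_FRAME_SIZE: 0x5,
};

// Encode a SETTINGS frame payload: 6 octets per setting (16-bit ID + 32-bit value).
function encodeSettingsPayload(settings) {
  const entries = Object.entries(settings);
  const payload = Buffer.alloc(entries.length * 6);
  entries.forEach(([name, value], i) => {
    const id = SETTINGS_IDS[name];
    if (id === undefined) throw new Error(`unknown setting: ${name}`);
    payload.writeUInt16BE(id, i * 6);
    payload.writeUInt32BE(value, i * 6 + 2);
  });
  return payload;
}

// Allow the peer to open at most 100 concurrent streams.
console.log(encodeSettingsPayload({ MAX_CONCURRENT_STREAMS: 100 }).toString('hex')); // 000300000064
```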

Flow control

At this point, you may be wondering why multiplexing over a single TCP connection doesn't result in head-of-line blocking.

HTTP/2 uses a flow-control scheme to ensure that streams are non-blocking. The flow-control window is simply an integer that indicates the buffering capacity of a server, a client, or an intermediary (like a proxy server). Flow control applies both to individual streams and to the connection as a whole. The initial value of the flow-control window is 65,535 octets for both new streams and the overall connection.

A peer can use the WINDOW_UPDATE frame to increase the number of octets it is willing to buffer. Servers, clients, and intermediaries independently advertise their flow-control windows and abide by the flow-control windows set by their peers.

Flow control in HTTP/2 limits how much data each stream can have in flight, preventing head-of-line blocking.
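The sender's bookkeeping can be sketched as a small class (a hypothetical name, not a library API). Only DATA frames count against the window, the receiver grants more credit with WINDOW_UPDATE, an increment of 0 is a protocol error, and the window may never exceed 2^31 - 1 octets. A real implementation keeps one such window per stream plus one for the connection and must respect both before sending.

```javascript
const INITIAL_WINDOW_SIZE = 65535;   // default for new streams and the connection
const MAX_WINDOW_SIZE = 2 ** 31 - 1; // largest legal flow-control window

// Sender-side view of one flow-control window (a stream's or the connection's).
class FlowControlWindow {
  constructor(size = INITIAL_WINDOW_SIZE) {
    this.available = size;
  }

  // Whether a DATA payload of `bytes` may be sent right now.
  canSend(bytes) {
    return bytes <= this.available;
  }

  // Called when a DATA frame payload of `bytes` is sent.
  consume(bytes) {
    if (!this.canSend(bytes)) throw new Error('flow-control window exceeded');
    this.available -= bytes;
  }

  // Called when a WINDOW_UPDATE frame with `increment` arrives from the peer.
  update(increment) {
    if (increment < 1) throw new Error('PROTOCOL_ERROR: increment must be positive');
    if (this.available + increment > MAX_WINDOW_SIZE) throw new Error('FLOW_CONTROL_ERROR');
    this.available += increment;
  }
}

const stream = new FlowControlWindow();
stream.consume(60000);
console.log(stream.canSend(10000)); // false: only 5535 octets of credit remain
stream.update(20000);
console.log(stream.canSend(10000)); // true
```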

Stream prioritization

The HTTP/2 protocol allows multiple requests and responses to be chopped into frames and multiplexed, so the sequence of transmission becomes critical for performance. HTTP/2 uses stream prioritization to tackle this issue. The client creates a prioritization tree using two rules:

A stream can depend on another stream. If a stream is not given an explicit dependency, it depends on the root stream. Streams have a parent-child relationship.

All dependent streams may be given an integer weight between 1 and 256.

Stream weights and stream dependencies in HTTP/2. Adapted from Introduction to HTTP/2.

Parent streams are delivered before their dependent streams. Dependent streams of the same parent should get resources proportional to their weights. However, dependent streams that share the same parent are not ordered with respect to each other.

A new level of dependency can be added by using the exclusive flag, which makes the stream the sole dependent of its parent stream. Lastly, stream priorities can be changed using the PRIORITY frame.
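The proportional-allocation rule for siblings can be shown with a small helper (hypothetical, not part of any HTTP/2 library). For example, two sibling streams with weights 12 and 4 should receive three quarters and one quarter of the available capacity. This models the RFC 7540 scheme described above; real servers treat weights as hints rather than guarantees.

```javascript
// Split `capacity` among sibling streams (same parent) in proportion to their weights.
// Weights must be integers from 1 to 256, as in RFC 7540.
function allocateByWeight(capacity, weights) {
  const entries = Object.entries(weights);
  for (const [name, weight] of entries) {
    if (!Number.isInteger(weight) || weight < 1 || weight > 256) {
      throw new RangeError(`invalid weight for ${name}: ${weight}`);
    }
  }
  const total = entries.reduce((sum, [, weight]) => sum + weight, 0);
  const shares = {};
  for (const [name, weight] of entries) {
    shares[name] = (capacity * weight) / total;
  }
  return shares;
}

console.log(allocateByWeight(100, { A: 12, B: 4 })); // { A: 75, B: 25 }
```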

Header compression

HTTP requests contain headers and cookie data, which add performance overhead. HTTP/2 uses the HPACK compression format to compress request and response metadata. Header field strings can be encoded using a static Huffman code. HTTP/2 also requires the client and the server to maintain and update an indexed list of previously seen header fields, alongside a predefined static table of common ones. The indexed list is used as a reference to encode previously transmitted values efficiently.
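Much of HPACK's compactness comes from how it writes numbers. Integers, including indexes into the header tables, use an N-bit prefix encoding (RFC 7541, section 5.1), and a header field already present in a table can be sent as a single indexed reference. The sketch below implements that integer encoding plus the indexed-field representation; `:method: GET`, for instance, is entry 2 in HPACK's static table, so it can be sent as the single octet 0x82. Huffman coding and the dynamic table are left out, and the function names are hypothetical.

```javascript
// Encode an integer with an N-bit prefix (RFC 7541, section 5.1).
// `firstByteFlags` holds the bits above the prefix in the first octet.
function encodeInteger(value, prefixBits, firstByteFlags = 0) {
  const maxPrefix = (1 << prefixBits) - 1;
  if (value < maxPrefix) return [firstByteFlags | value]; // fits in the prefix
  const bytes = [firstByteFlags | maxPrefix];
  let rest = value - maxPrefix;
  while (rest >= 128) {
    bytes.push((rest % 128) + 128); // low 7 bits with the continuation bit set
    rest = Math.floor(rest / 128);
  }
  bytes.push(rest);
  return bytes;
}

// Indexed Header Field Representation: a leading 1 bit, then the index with a 7-bit prefix.
function encodeIndexedField(index) {
  return encodeInteger(index, 7, 0x80);
}

console.log(encodeInteger(1337, 5)); // [ 31, 154, 10 ]
console.log(encodeIndexedField(2));  // [ 130 ]  (0x82, i.e. ":method: GET")
```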

Server push

Server push is a performance feature that allows a server to send responses to an HTTP/2-compliant client before the client requests them. This feature is useful when the server knows that the client needs the ‘pushed’ responses to process the original request fully.

Server push has the potential to improve performance by taking advantage of idle time on the network. However, server push can be counterproductive if not implemented correctly.

Pushing resources that are already in the client’s cache wastes bandwidth. Wasting bandwidth has an opportunity cost because it could have been used for relevant responses.

Cache Digests for HTTP/2, a proposed specification published as an IETF draft, addresses this issue. In the meantime, the problem can be tackled by pushing only on a visitor's first visit and, where supported, by using cache digests.

Pushed resources compete with the delivery of the HTML, adversely impacting page load times. Developers can avoid this by limiting the size of assets pushed.
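In Node.js, a push is started from the `'stream'` event of an HTTP/2 server with `stream.pushStream()`. The sketch below pushes only when the client permits it (`stream.pushAllowed`) and, as a crude version of first-visit-only pushing, only when the request carries no cookie named `visited`. That cookie, the `/style.css` asset, and the page contents are hypothetical, chosen for illustration.

```javascript
// Handler for the 'stream' event of a Node.js http2 server (core API).
// Hypothetical assumptions: the page at '/' needs '/style.css', and a 'visited'
// cookie marks returning visitors who probably have the stylesheet cached.
function handleStream(stream, headers) {
  if (headers[':path'] !== '/') {
    stream.respond({ ':status': 404 });
    stream.end();
    return;
  }

  const cookies = headers.cookie || '';
  const firstVisit = !/(^|;\s*)visited=/.test(cookies);

  stream.respond({
    ':status': 200,
    'content-type': 'text/html',
    'set-cookie': 'visited=1',
  });

  // Push only if the client allows it (SETTINGS_ENABLE_PUSH) and this looks like a first visit.
  if (stream.pushAllowed && firstVisit) {
    stream.pushStream({ ':path': '/style.css' }, (err, pushStream) => {
      if (err) return; // push is only an optimization, so errors are ignored here
      pushStream.respond({ ':status': 200, 'content-type': 'text/css' });
      pushStream.end('body { font-family: sans-serif; }');
    });
  }

  stream.end('<link rel="stylesheet" href="/style.css"><h1>Hello</h1>');
}
```

To try it, attach the handler to a server created with `http2.createSecureServer()` via `server.on('stream', handleStream)`. Because it only calls `respond`, `pushStream`, and `end`, it can also be exercised without a network by passing it a stub object that records those calls.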

Code example of running an HTTP/2 server with Node.js

You may need to update the Node.js version for the HTTP/2 module to be available.

One important thing to take into consideration is that currently no browser supports HTTP/2 without encryption, which means that we need to configure our server to work over a secure connection (HTTPS).

Most modern frameworks and servers now support HTTP/2. Here is a simple example of spinning up an HTTP/2 web server using Node.js:

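```javascript
// Minimal HTTP/2 server using Node.js's built-in http2 module.
const http2 = require('http2');
const fs = require('fs');

// Request handler using the HTTP/1-style compatibility API (req, resp).
function onRequest(req, resp) {
  resp.end('Hello World');
}

// Browsers only support HTTP/2 over TLS, so a key and certificate are required.
// For local testing, a self-signed pair can be generated with, for example:
//   openssl req -x509 -newkey rsa:2048 -nodes -sha256 -subj '/CN=localhost' \
//     -keyout localhost-privkey.pem -out localhost-cert.pem
const server = http2.createSecureServer({
  key: fs.readFileSync('localhost-privkey.pem'),
  cert: fs.readFileSync('localhost-cert.pem'),
}, onRequest);

server.on('error', (err) => console.error(err));
server.listen(8443);
```

After running the code, open a web browser of your choice with HTTP/2 support and go to https://localhost:8443/stream (the handler answers every path the same way). Because the certificate is self-signed, the browser will show a security warning that you need to accept first.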

Differences between HTTP/2 and HTTP/1.x

| HTTP/1.x | HTTP/2 |
|---|---|
| Text protocol with an unstructured format made up of lines of text in ASCII encoding. | Binary protocol. HTTP messages are formatted into packets (called frames), and each frame is assigned to a stream. HTTP/2 frames have a specific format, including a length declared at the beginning of each frame and various other fields in the frame header. |
| Can be used with or without encryption. | Mostly encrypted. The HTTP/2 protocol does not require encryption, but most clients, including all major browsers, only support HTTP/2 over TLS. |
| Content negotiation, the process of selecting the best representation for a given response when multiple representations are available, can be server-driven or agent-driven (or “transparent”). Adds overhead. | Protocol negotiation can happen during the TLS handshake using ALPN, or through an “Upgrade” HTTP header sent by the client. In the Upgrade case, a server that supports HTTP/2 responds with a "101 Switching Protocols" status and from then on speaks HTTP/2 on that connection. This costs a full network round trip, but the upside is that an HTTP/2 connection can usually be kept alive and reused to a larger extent than HTTP/1 connections. Even though the protocol itself does not require TLS, for the purposes of web development HTTP/2 over TLS might as well be a requirement. |
| Uses pipelining to send multiple requests over one connection, but responses must be returned in order. | Uses streams for multiplexing. Streams can be prioritized, re-prioritized, and canceled at any time. Streams have individual flow control and can have dependencies. This is more efficient than the pipelining used in HTTP/1.1. |

Comparison of flow in non-pipelined, pipelined, and multiplexed streams. Adapted from LoadMaster - HTTP/2.

Note that:

HTTP/1.x headers are transmitted in plain text, but HTTP/2 compresses headers.

Servers can push content to the client that the client has not requested.

In HTTP/1.1, the client cannot retry a non-idempotent request when an error occurs. Some server processing may have occurred before the error, and a reattempt can have an undesirable effect. HTTP/2 has mechanisms for providing a guarantee that a request has not been processed.

Head-of-line blocking at the TCP level can still occur with HTTP/2, but at the HTTP level it does not: as the rightmost diagram above (for multiplexed HTTP) shows, response 2 can complete before response 1. Consider response 1 as a large media file spanning 100 HTTP/2 DATA frames, while response 2 is a small amount of text that fits in a single HTTP/2 frame. The client receives the first frame of response 1 before the first frame of response 2, but because HTTP/2 multiplexes the frames, the client doesn't have to wait for the other 99 frames of response 1 before it gets the first (and only) frame of response 2. Response 2 then completes while response 1 is still being sent.
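The retry guarantee noted above comes from the GOAWAY frame. When an endpoint shuts a connection down, GOAWAY carries the highest stream identifier it might have processed; streams with higher identifiers are guaranteed untouched, so a client can replay them on a new connection, even for non-idempotent methods like POST. (A stream reset with the REFUSED_STREAM error code gives the same guarantee for a single stream.) A sketch of the client-side decision, with hypothetical names:

```javascript
// Given the client's in-flight requests and the last-stream-ID from a GOAWAY frame,
// decide which requests can be replayed on a new connection.
function splitOnGoaway(inFlight, lastStreamId) {
  const safeToRetry = []; // never processed by the server: safe to retry, even if non-idempotent
  const uncertain = [];   // may have been processed: retry only if the request is idempotent
  for (const request of inFlight) {
    (request.streamId > lastStreamId ? safeToRetry : uncertain).push(request);
  }
  return { safeToRetry, uncertain };
}

const result = splitOnGoaway(
  [
    { streamId: 1, method: 'GET' },
    { streamId: 3, method: 'POST' },
    { streamId: 5, method: 'POST' },
  ],
  3,
);
console.log(result.safeToRetry.map((r) => r.streamId)); // [ 5 ]
console.log(result.uncertain.map((r) => r.streamId));   // [ 1, 3 ]
```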

Implementing HTTP/2

Currently, all major browsers support the HTTP/2 protocol over HTTPS. To serve an application over HTTP/2, the web server must be configured with an SSL/TLS certificate. You can quickly get up and running with a free certificate from Let’s Encrypt.

All major server software supports HTTP/2:

Apache Web Server version 2.4.17 or later supports HTTP/2 via mod\_http2 without the need for adding patches. It can be downloaded from: https://httpd.apache.org/download.cgi#apache24. Version 2.4.12 supports HTTP/2 via mod\_h2. However, patches must be applied to the source code of the server.

NGINX versions 1.9.5 and above support HTTP/2, and NGINX has supported Server Push since version 1.13.9. Download latest, stable, and legacy versions from: http://nginx.org/en/download.html

Microsoft IIS supports HTTP/2 in Windows 10 and Windows Server 2016. IIS is included in Windows 10 but is not turned on by default: use Turn Windows features on or off in the Control Panel, and look for Internet Information Services. See also iis.net.

Gotchas: Design considerations of HTTP/2

HTTP/2 allows the client to send all requests concurrently over a single TCP connection. Ideally, the client should receive the resources faster. On the flip side, concurrent requests can increase the load on the servers. With HTTP/1.1, requests are spread out, but with HTTP/2, servers can receive requests in large batches, which can lead to requests timing out. Server load spikes can be mitigated by inserting a load balancer or a proxy server that throttles the requests.

Most browsers limit the number of concurrent connections to a given origin (a combination of scheme, host, and port), and requests on a single HTTP/1.1 connection must be processed in series. This means HTTP/1.1 browsers effectively limit the number of concurrent requests to that origin: the user’s browser throttles requests to the server and keeps its traffic smooth.

Server support for HTTP/2 prioritization is not yet mature. Software support is still evolving. Some CDNs or load balancers may not support prioritization properly.

The HTTP/2 push feature can do more harm than good if not used intelligently. For example, a returning visitor may already have cached copies of files, in which case the server should not push them. Making server push cache-aware solves this problem, but cache-aware push mechanisms can get complicated.

HTTP/2 has addressed HTTP/1.1's head-of-line blocking problem at the HTTP level. However, packet-level head-of-line blocking in the underlying TCP stream can still block all transactions on the connection.
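A toy model makes the TCP-level problem visible. TCP hands bytes to the application strictly in order, so if one segment is lost, everything after it waits for the retransmission, whichever HTTP/2 stream the data belongs to. The sketch assumes each segment carries exactly one frame and identifies segments by position; both are simplifications, and the function name is hypothetical.

```javascript
// segments: frames in TCP sequence order, e.g. { streamId, frame }.
// received: Set of segment indexes that have arrived.
// TCP only releases the contiguous prefix of received segments to the application.
function deliverToApplication(segments, received) {
  const delivered = [];
  for (let i = 0; i < segments.length; i++) {
    if (!received.has(i)) break; // a gap blocks everything behind it, on every stream
    delivered.push(segments[i]);
  }
  return delivered;
}

const segments = [
  { streamId: 1, frame: 'DATA 1a' },
  { streamId: 3, frame: 'DATA 3a' }, // this segment is lost in transit
  { streamId: 5, frame: 'DATA 5a' },
  { streamId: 1, frame: 'DATA 1b' },
];
// Segments 0, 2 and 3 arrived; segment 1 (stream 3) is awaiting retransmission.
const delivered = deliverToApplication(segments, new Set([0, 2, 3]));
console.log(delivered.map((s) => s.frame)); // [ 'DATA 1a' ]
```

Streams 1 and 5 are stalled even though their data has already arrived, because it sits behind the missing stream 3 segment.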

Alternatives to HTTP/2

The following technologies can be used as alternatives to HTTP/2 in its standard form in order to enhance performance for realtime usage.

WebSockets

WebSockets are established through an HTTP/1.1 handshake and then provide fully bidirectional communication. The WebSocket protocol elevates the possibilities of communication over the Internet and allows a truly realtime web.

WebSockets are a thin transport layer built on top of a device’s TCP/IP stack. The intent is to provide what is essentially an as-close-to-raw-as-possible TCP communication layer to web application developers while adding a few abstractions to eliminate possible friction that would otherwise exist with how the web works.

WebSockets allow for a higher amount of efficiency compared to REST because they do not require the HTTP request/response overhead for each message sent and received. When a client wants ongoing updates about the state of the resource, WebSockets are generally a good fit.
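The HTTP/1.1 part of WebSockets is the opening handshake. The client sends an `Upgrade: websocket` request with a random `Sec-WebSocket-Key`, and the server answers `101 Switching Protocols` with a `Sec-WebSocket-Accept` value derived from that key, as defined in RFC 6455; after that, the connection carries WebSocket frames rather than HTTP. The sketch below computes the accept value with Node's built-in `crypto` module (the function name is hypothetical).

```javascript
const crypto = require('crypto');

// Fixed GUID defined by RFC 6455 for computing Sec-WebSocket-Accept.
const WEBSOCKET_GUID = '258EAFA5-E914-47DA-95CA-C5AB0DC85B11';

// Sec-WebSocket-Accept = base64(SHA-1(Sec-WebSocket-Key + GUID))
function computeAcceptKey(secWebSocketKey) {
  return crypto
    .createHash('sha1')
    .update(secWebSocketKey + WEBSOCKET_GUID)
    .digest('base64');
}

// Example key and expected value from RFC 6455, section 1.3.
console.log(computeAcceptKey('dGhlIHNhbXBsZSBub25jZQ==')); // s3pPLMBiTxaQ9kYGzzhZRbK+xOo=
```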

See Ably topic WebSockets - A Conceptual Deep-Dive for more details and WebSockets vs HTTP for a comparison between the two protocols.

MQTT (Message Queuing Telemetry Transport)

MQTT is an application-layer protocol especially built to enable efficient communication to and from Internet of Things (IoT) devices, and it is widely used in IoT projects. Its suitability comes from its small size, low power usage, minimized data packets, and ease of implementation.

As well as this, unlike HTTP, MQTT does not follow the request/response mechanism for communication. Instead, it uses the publish-subscribe paradigm (pub/sub).
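In pub/sub, publishers and subscribers never address each other directly: publishers send messages to a topic on a broker, and the broker forwards each message to every client whose subscription filter matches. MQTT topic filters support two wildcards: `+` matches exactly one topic level, and `#` matches any number of remaining levels (including none). The hypothetical matcher below sketches that rule; it ignores the special handling of topics that begin with `$`.

```javascript
// Does an MQTT topic filter (which may contain + and # wildcards) match a topic name?
function topicMatches(filter, topic) {
  const filterLevels = filter.split('/');
  const topicLevels = topic.split('/');
  for (let i = 0; i < filterLevels.length; i++) {
    const level = filterLevels[i];
    if (level === '#') return true;                              // zero or more remaining levels
    if (i >= topicLevels.length) return false;                   // topic is shorter than the filter
    if (level !== '+' && level !== topicLevels[i]) return false; // + matches any single level
  }
  return filterLevels.length === topicLevels.length;
}

console.log(topicMatches('home/+/temperature', 'home/kitchen/temperature')); // true
console.log(topicMatches('home/#', 'home/kitchen/humidity'));                // true
console.log(topicMatches('home/+', 'home/kitchen/humidity'));                // false
```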

See Ably topic MQTT: A Conceptual Deep-Dive for more details.

Server-Sent Events (SSE)

SSE is an open, lightweight, subscribe-only protocol for event-driven data streams. SSE provides streaming events over HTTP/1.1 and HTTP/2 as a layer on top of HTTP.

SSE connections can only push data to the browser (hence “server-sent”). Good examples of applications that could benefit from SSE are online stock quotes and social media service Twitter’s updating timeline.

When simply requesting data from a server, a plain XMLHttpRequest (XHR) will do. However, keeping the connection open with XHR streaming brings its own set of overheads, including handling parsing logic and connection management.

In such situations you could use SSE instead. SSE provides a layer of connection management and parsing logic, allowing us to easily keep the connection open while a server pushes new events to the client as they become available.
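The wire format that SSE parses for you is plain text: each event is a block of `field: value` lines (`event`, `data`, `id`, `retry`), lines starting with a colon are comments, and a blank line dispatches the event, with multiple `data` lines joined by newlines. In the browser, `EventSource` does this work. The simplified parser below (a hypothetical function) assumes the whole stream is already in one string with `\n` line endings, and it ignores `retry` and reconnection.

```javascript
// Parse a complete text/event-stream body into events (simplified: \n line endings,
// no `retry` handling, whole input available at once).
function parseEventStream(text) {
  const events = [];
  let type = '';
  let data = [];
  let lastEventId = ''; // the last event ID persists across events until changed

  for (const line of text.split('\n')) {
    if (line === '') {
      // A blank line dispatches the pending event if it carried any data.
      if (data.length > 0) {
        events.push({ type: type || 'message', data: data.join('\n'), id: lastEventId });
      }
      type = '';
      data = [];
      continue;
    }
    if (line.startsWith(':')) continue; // comment, often sent as a keep-alive

    const colon = line.indexOf(':');
    const field = colon === -1 ? line : line.slice(0, colon);
    let value = colon === -1 ? '' : line.slice(colon + 1);
    if (value.startsWith(' ')) value = value.slice(1); // strip a single leading space

    if (field === 'event') type = value;
    else if (field === 'data') data.push(value);
    else if (field === 'id') lastEventId = value;
    // `retry` and unknown fields are ignored in this sketch.
  }
  return events; // an unterminated final event (no trailing blank line) is dropped
}

const body =
  'event: price\ndata: {"symbol":"ABC","price":42}\n\n' +
  ': keep-alive\n\n' +
  'id: 7\ndata: line 1\ndata: line 2\n\n';
console.log(parseEventStream(body).map((e) => e.type)); // [ 'price', 'message' ]
```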

In practice everything that can be done with SSE can also be done with WebSockets. However, use of WebSockets can be overkill for some types of application, and the backend could be easier to implement with a protocol such as SSE.

Another possible advantage of SSE is that it can be polyfilled into older browsers that do not support it natively using just JavaScript.

See Ably topic Server-Sent Events (SSE): A Conceptual Deep Dive for more details.

HTTP/3

HTTP/3 is a new iteration of HTTP, which has been in development since 2018 and, even though it has not been standardized at the time of writing, some browsers are already making use of it.

The aim of HTTP/3 is to provide fast, reliable, and secure web connections across all forms of devices by straightening out the transport-related issues of HTTP/2. To do this, it uses a different transport-layer protocol called QUIC, which runs over the User Datagram Protocol (UDP) instead of TCP, the protocol used by all previous versions of HTTP.

See Ably topic HTTP/3 Deep Dive for more details or read HTTP/2 vs HTTP/3: A Comparison.

Conclusion

HTTP/2 is optimized for speed, which makes for a better user experience. Using HTTP/2 can also lead to developer happiness because it is no longer necessary to use hacks like domain sharding, asset concatenation, and image spriting. This reduces development complexity.

HTTP/2 makes web browsing faster than its predecessor did. Header compression and a single multiplexed connection lower its bandwidth overhead, so HTTP/2 can take advantage of networking developments from the 16 years between HTTP/1.1's 1999 specification and HTTP/2 in a way that HTTP/1.1 could not.

HTTP/2 is focused on performance, particularly at the user end.

HTTP/2 is designed for web traffic, and specifically around the needs of serving web content. However, for realtime communication, specifically bi-directional communication between client and server, protocols like WebSockets and MQTT are better suited.

HTTP/2 and Ably

Ably is an enterprise-ready pub/sub messaging platform. We make it easy to efficiently design, quickly ship, and seamlessly scale critical realtime functionality delivered directly to end-users. Every day we deliver billions of realtime messages to millions of users for thousands of companies.

We power the apps that people, organizations, and enterprises depend on every day, like Lightspeed Systems’ realtime device management platform for over seven million school-owned devices, Vitac’s live captioning for 100s of millions of multilingual viewers for events like the Olympic Games, and Split’s realtime feature flagging for one trillion feature flags per month.

We’re the only pub/sub platform with a suite of baked-in services to build complete realtime functionality: presence shows a driver’s live GPS location on a home-delivery app, history instantly loads the most recent score when opening a sports app, stream resume automatically handles reconnection when swapping networks, and our integrations extend Ably into third-party clouds and systems like AWS Kinesis and RabbitMQ. With 25+ SDKs we target every major platform across web, mobile, and IoT.

Our platform is mathematically modeled around Four Pillars of Dependability so we’re able to ensure messages don’t get lost while still being delivered at low latency over a secure, reliable, and highly available global edge network.

Developers from startups to industrial giants choose to build on Ably to simplify engineering, minimize DevOps overhead, and increase development velocity.

Further reading

See also the Medium article: Exploring http2 (Part 2): with node-http2 core and hapijs
